Digital audio, at its most fundamental level, is a mathematical representation of a continuous sound.

The digital world can get complicated very quickly, so it’s no surprise that a great deal of confusion exists.

The point of this article is to clarify how digital audio works without delving fully into the mathematics, but also without leaving out anything essential.

The key to understanding digital audio is to remember that what’s in the computer isn’t sound – it’s math.

What Is Sound?

Sound is the vibration of molecules. Mathematically, sound can accurately be described as a “wave” – meaning it has a peak part (a pushing stage) and a trough part (a pulling stage).

If you have ever seen a graph of a sound wave, it is drawn as a curve of some sort above the zero axis, followed by a curve below the zero axis.

What this means is that sound is “periodic.” All sound waves have at least one push and one pull – a positive curve and negative curve. That’s called a cycle. So – fundamental concept – all sound waves contain at least one cycle.

The next important idea is that any periodic function can be mathematically represented by a series of sine waves. In other words, the most complicated sound is really just a large mesh of sinusoidal sound (or pure tones). A voice may be constantly changing in volume and pitch, but at any given moment the sound you are hearing is a part of some collection of pure sine tones.
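To make the "sum of sine waves" idea concrete, here is a minimal sketch that builds an approximate square wave out of pure sine tones. The square wave, the 100 Hz frequency, and the function name `square_wave_approx` are illustrative choices, not anything specific to a particular audio tool.

```python
import math

def square_wave_approx(t, freq_hz, num_harmonics):
    """Sum odd sine harmonics (1, 3, 5, ...) to approximate a square wave at time t."""
    total = 0.0
    for k in range(num_harmonics):
        n = 2 * k + 1  # odd harmonic number
        total += math.sin(2 * math.pi * n * freq_hz * t) / n
    return 4 / math.pi * total

# Evaluate a 100 Hz wave a quarter of the way through its cycle,
# where an ideal square wave sits at +1.
t = 0.25 / 100
for count in (1, 5, 50):
    print(count, "sine waves:", round(square_wave_approx(t, 100, count), 4))
```

With a single sine wave the value overshoots the ideal +1. As more sine waves are added, the sum settles closer and closer to it.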

Lastly, and this part has been debated to a certain extent, people do not hear pitches higher than about 22 kHz. So any tones above 22 kHz are not necessary to record.

So, our main ideas so far are:

—Sound waves are periodic and can therefore be described as a bunch of sine waves,

—Any waves over 22 kHz are not necessary because we can’t hear them.

How To Get From Analog To Digital

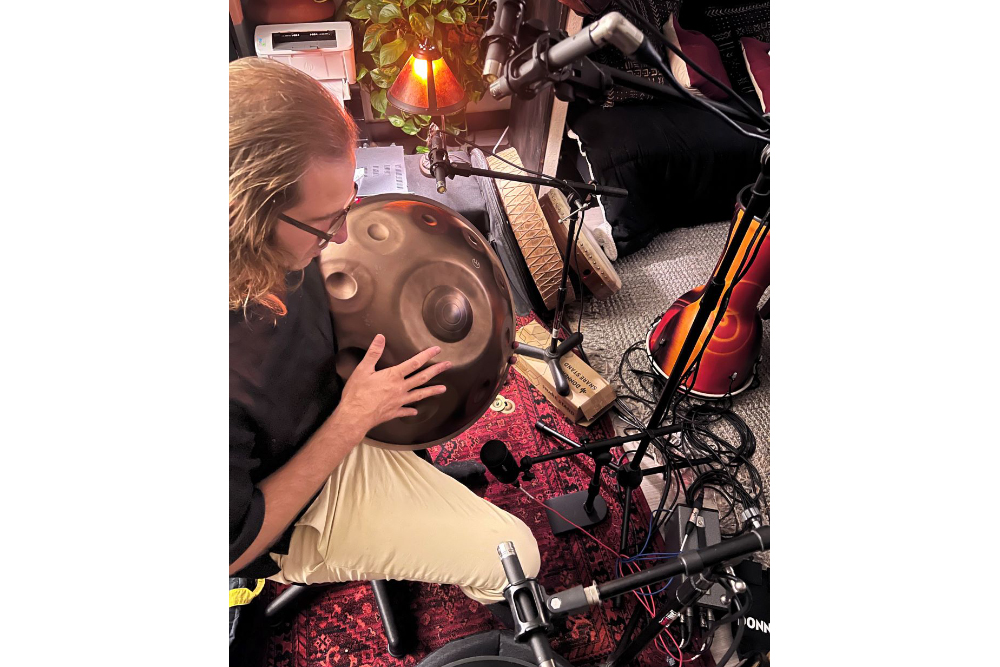

Let’s say I’m talking into a microphone. The microphone turns my acoustic voice into a continuous electric current. That electric current travels down a wire into some kind of amplifier, then keeps going until it hits an analog-to-digital converter.

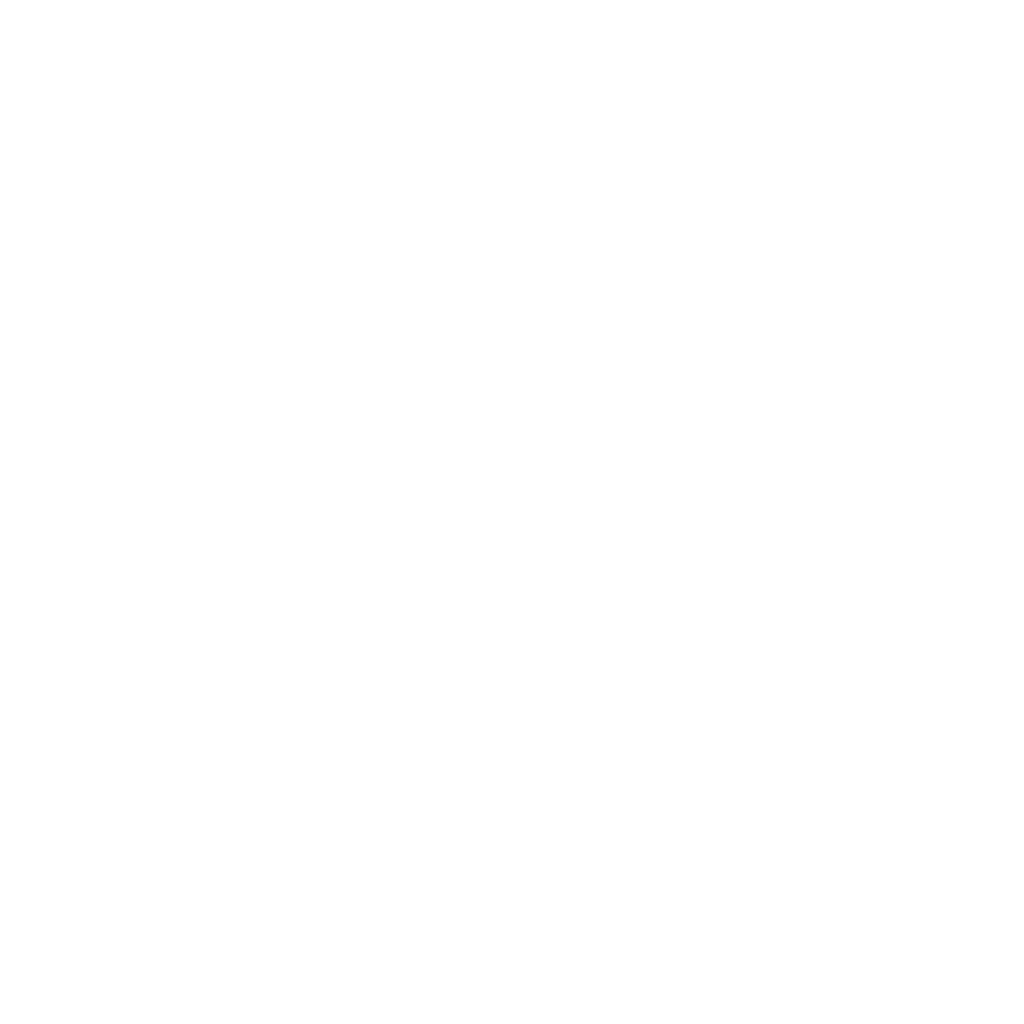

Remember that computers don’t store sound, they store math, so we need something that can turn our analog signal into a series of 1s and 0s. That’s what the converter does. Basically it’s taking very fast snapshots, called samples, and giving each sample a value of amplitude.

This gives us two basic values to plot our points – one is time, and the other is amplitude.
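As a rough sketch of what those snapshots look like as data, the code below samples a pure tone at a fixed rate and keeps a (time, amplitude) pair for each sample. The 1 kHz tone, the 8000 samples-per-second rate, and the name `sample_sine` are assumptions chosen to keep the numbers small. A real converter measures an incoming voltage rather than computing a sine.

```python
import math

def sample_sine(freq_hz, sample_rate_hz, num_samples):
    """Return (time, amplitude) pairs for a sine wave sampled at a fixed rate."""
    points = []
    for i in range(num_samples):
        t = i / sample_rate_hz  # time of this snapshot, in seconds
        amplitude = math.sin(2 * math.pi * freq_hz * t)
        points.append((t, amplitude))
    return points

# A 1 kHz tone sampled 8000 times per second: 8 snapshots per cycle.
for t, a in sample_sine(1000, 8000, 8):
    print(f"{t * 1000:.3f} ms  {a:+.3f}")
```

Each printed row is one point on the plot: a position in time and the amplitude measured at that instant.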

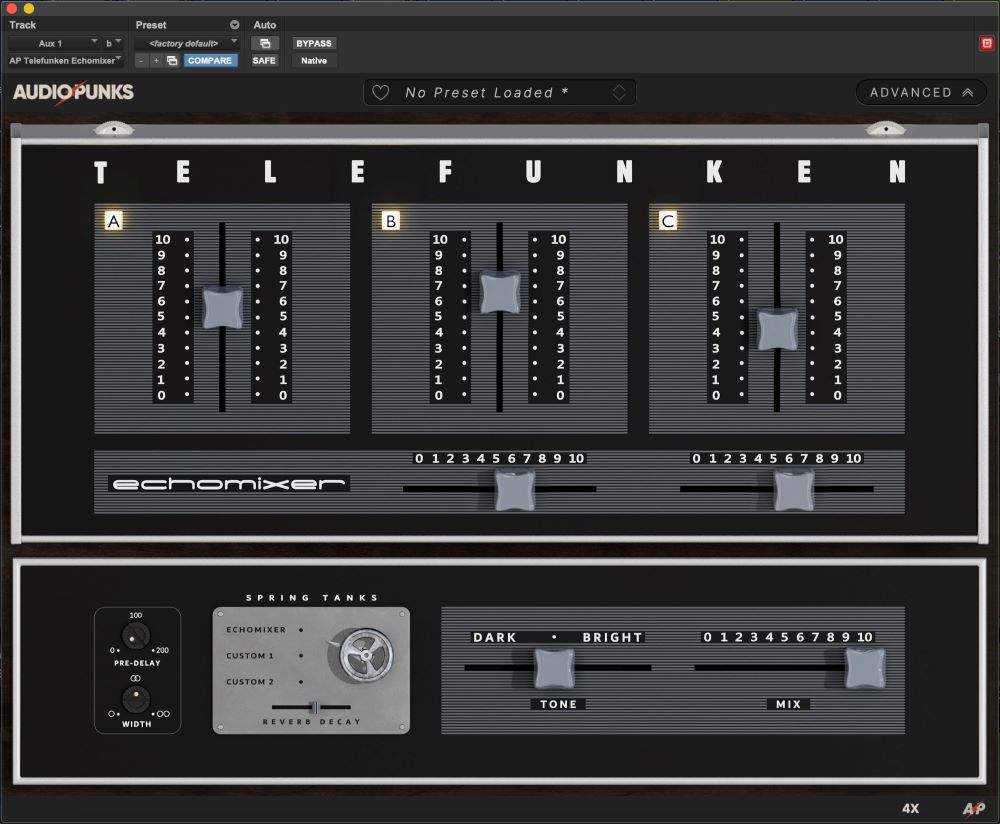

Resolution & Bit Depth

Nothing is continuous inside the digital world – everything is assigned specific mathematical values.

In an analog signal, a sound wave will reach its peak amplitude, and all values of sound level from 0 dB to peak dB will exist.

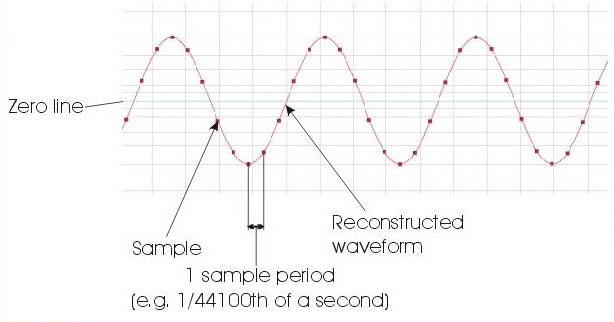

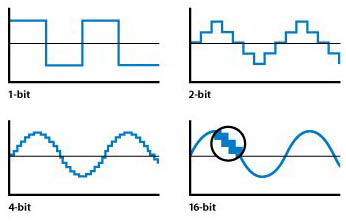

In a digital signal, only a designated number of amplitude points exist.

Think of an analog signal as someone going up an escalator – touching all points along the way, while digital is like going up a ladder – you are either on one rung or the next.

Let’s say you have a rung at 50, and a rung at 51. Your analog signal might have a value of 50.46 – but it has to be on one rung or the other – so it gets rounded off to rung 50.

That means the actual shape of the sound is getting distorted. Since the analog signal is continuous, that means this is constantly happening during the conversion process. It’s called quantization error, and it sounds like weird noise.

But, let’s add more rungs to the ladder. Let’s say you have a rung at 50, one at 50.2, one at 50.4, one at 50.6, and so on. Your signal coming in at 50.46 is now going to get rounded off to 50.4. This is a notable improvement. It doesn’t get rid of the quantization error, but it reduces its impact.
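The ladder arithmetic can be checked with a few lines of code. This is only a sketch of rounding to the nearest rung. The helper name `quantize` and its `step` parameter (the spacing between rungs) are made up for illustration.

```python
def quantize(value, step):
    """Round value to the nearest multiple of step (the nearest 'rung')."""
    return round(value / step) * step

coarse = quantize(50.46, 1.0)  # rungs at 50, 51, ...
fine = quantize(50.46, 0.2)    # rungs at 50.0, 50.2, 50.4, 50.6, ...

print("coarse rung:", coarse, "error:", round(abs(50.46 - coarse), 2))
print("fine rung:  ", round(fine, 2), "error:", round(abs(50.46 - fine), 2))
```

The error drops from roughly 0.46 on the coarse ladder to roughly 0.06 on the finer one.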

Increasing the bit depth is essentially like increasing the number of rungs on the ladder. By reducing the quantization error, you push your noise floor down.
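To put rough numbers on this, the sketch below quantizes a full-scale sine wave at a few bit depths and measures the average size of the rounding error in decibels. The test tone (997 Hz at a 48 kHz sample rate), the symmetric scaling, and the function names are assumptions made for the example. Real converters typically add dither and behave less ideally.

```python
import math

def quantize_to_bits(sample, bits):
    """Round a sample in [-1.0, 1.0] to the nearest level available at this bit depth."""
    scale = 2 ** (bits - 1) - 1  # e.g. 32767 for 16-bit audio (symmetric scaling assumed)
    return round(sample * scale) / scale

def noise_floor_db(bits, num_samples=10000):
    """RMS quantization error of a full-scale sine, in dB relative to full scale."""
    total = 0.0
    for i in range(num_samples):
        x = math.sin(2 * math.pi * 997 * i / 48000)
        err = quantize_to_bits(x, bits) - x
        total += err * err
    rms = math.sqrt(total / num_samples)
    return 20 * math.log10(rms)

for bits in (8, 16, 24):
    print(bits, "bits:", 2 ** bits, "codes, error about",
          round(noise_floor_db(bits), 1), "dB")
```

Each extra bit doubles the number of rungs and lowers the error by roughly 6 dB, which is why the jump from 16 to 24 bits moves the noise floor so far down.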

Who cares? Well, in modern music we use a LOT of compression. It’s not uncommon to peak limit a sound, compress it, sometimes add a third stage of compression, and then compress and limit the master buss before the final print.

Remember that one of the major artifacts of compression is bringing the noise floor up! Suddenly, the very quiet quantization error noise is a bit more audible. This becomes particularly noticeable in the quietest sections of the recording, such as fades, reverb tails, and pianissimo playing.
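A simplified static compressor curve shows why this happens. In the sketch below, the threshold, ratio, and make-up gain values are hypothetical settings, and the model ignores attack and release entirely. It only shows what happens to a loud peak and to a quiet noise floor, both measured in dB.

```python
def compress_db(level_db, threshold_db=-20.0, ratio=4.0, makeup_db=10.0):
    """Static compressor curve: reduce levels above the threshold, then add make-up gain."""
    if level_db > threshold_db:
        level_db = threshold_db + (level_db - threshold_db) / ratio
    return level_db + makeup_db  # make-up gain lifts everything, noise included

peak_in, noise_in = -2.0, -96.0
peak_out, noise_out = compress_db(peak_in), compress_db(noise_in)

print("peak: ", peak_in, "->", peak_out)
print("noise:", noise_in, "->", noise_out)
print("gap between peak and noise:", peak_in - noise_in, "->", peak_out - noise_out)
```

The peak comes down while the noise goes up by the full make-up gain, so the gap between the two shrinks. Stack several compressors and the effect adds up.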

A higher bit depth recording lets you hit your converter with more headroom to spare, and without compression, while still staying well above the noise floor.