Editor’s Note: The original title of this commentary was “Acoustics 101 for Architects.” The author has recently learned that Acoustics 101® is a registered trademark of Auralex® Acoustics. As such, this commentary, with which Auralex isn’t connected, has been retitled. The actual Auralex Acoustics 101 publication may be accessed here (PDF).

This is the second edition of this commentary. It is tailored to the one group of people who have more influence over a building’s acoustics than any other: architects. The author presented the first edition in 2013 at the 166th meeting of the Acoustical Society of America, in San Francisco, CA.

The subject is Architectural Acoustics, a field that is broader than most imagine. To do justice to the theme, we must briefly touch on many subordinate topics, most having a synergetic relationship bonding architecture and sound.

This examination is based on fundamentals and the author’s experience, not perfection. It covers many of the basics while also exploring several modern and esoteric matters. You will be introduced to interesting and analytical subjects; some you may know, some you may never have considered.

One might ask: Why offer this to the pro-audio community when the target audience is architects? The simple answer: Most pro-audio practitioners have to work in acoustic environments that are out of their control because of past decisions made by an architect or owner. Also consider: There are probably many new and veteran sound techs who have yet to accrue a solid acoustical foundation. Most certainly, there are knowledge gaps on both sides of the designer/user relationship. When opportunities arise, this material will help almost everyone to better understand and discuss acoustics.

Because of its scope and length, this compendium is being serialized over the course of many issues. Hopefully it will be of interest to many, and as you follow along you’ll find value that can be shared with others, including the owners and architects you may interact with on a regular basis.

Here are a few examples of what you’ll find if you choose to join and stay with us on this acoustical tour:

- What is sound and why is it so hard to manage or control?

- The lengths of commonly heard low- and high-frequency sound waves vary by as much as 400:1. Why does this disparity matter?

- How and why do various audible frequencies behave differently when interacting with various materials, structures, shapes and finishes?

- Why there are no “one size fits all” solutions.

- There are three acoustical tools available to both the architect and the acoustician. What are they? How can they benefit or hinder the work of each craft?

- Large rooms vs. small rooms: How and why do acoustic challenges change as rooms become larger or smaller?

- Room geometry: Why some shapes are much better than others. Examples and explanations.

- Reverberation and echo: How do they differ? Which is better, or worse, and why? How much is too much, or too little?

- New reverberation design goals for 21st century performance venues.

- What is the Parametric Method of Acoustic Treatment?

- Speech intelligibility: We all know it matters. What can architects do to help or hinder?

- Three simple tests: Quick, easy ways to evaluate the basic acoustical merits of a room, without any fancy test equipment or training.

- Opportunities and tradeoffs: Blending architecture, acoustics and pragmatism.

This commentary has four main goals:

First – It will endeavor to put the essential terminology and concepts of Architectural Acoustics (AA) into the hands of the architect, owner, end user, or anyone else who would like to have a better understanding of this subject.

Second – It will introduce the incredible dimensional range of wavelengths that exist between the lowest and highest audible sounds. Once defined and established, we can overlay this spatial understanding of sound waves onto the physical structures they touch.

Third – It will help architects better understand and visualize the relationship between the physical shapes, textures, and dimensions of their work, and why those design decisions impact the qualitative behavior of sound. For example: Architecture requires three-dimensional thinking and implementation; yet sound is a four-dimensional experience that must coexist within a three-dimensional structure. The fourth dimension, time, is significant because the speed of sound is so slow that the size and shape of a built environment can often dictate the quality and clarity of all sounds within.

Fourth – It will explain the importance of interior symmetry. Sound propagation is an amazingly flexible phenomenon, but not something that is easily controlled once a group of sound waves begins to move in any particular direction. As a result, general building symmetry – and even more importantly, seating symmetry – is an extremely important component in achieving high-quality dispersion and reception of sound.

It’s easy to think that sound is completely removed from the fundamental principles of architecture. Nothing could be further from reality. Consider this: If a person were seated in an amazingly beautiful structure at night — one without illumination — they would see few, if any, of the features, shapes, materials, colors, textures, or workmanship that was created. However, given that same dearth of light, all audible sounds remain unaltered, for better or worse.

This issue covers these first three Sections of the subject matter:

- Architectural Acoustics Defined

- What is Sound?

- Sound Propagation

1.0 ARCHITECTURAL ACOUSTICS DEFINED

1.1 Architectural Acoustics can be defined as the science, study and application of acoustic principles as they are implemented inside a building or structure. In the context of this paper the terminology focuses on built environments that will be used for live, amplified, creative performances, or the audible presentation of other useful information. In other words, medium and large room acoustics. To a lesser extent, small room acoustics will be covered as well.

1.2 While AA includes topics such as noise control, and managing sound transmission in and out of a room or building, the main focus is managing reverberation and resonances that directly affect the quality of sound in any venue.

A. Too much reverberation causes speech and music clarity to suffer greatly. If there’s too little, things like acoustic instruments, musical vocals, and congregational singing are dulled, lifeless, or unblended – like they often are when performing outdoors.

B. Room resonance is often problematic at the lower end of the frequency spectrum, but can rise up into the low-mid speech frequencies in small rooms.

1. All rooms will sympathetically resonate when stimulated with sound that has wavelengths that match any or all of its major room dimensions.

2. The terminology for this resonance includes: room modes, eigentones, or standing waves. All three are synonymous. More on this in later sections.
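To make the link between room dimensions and resonance more concrete, here is a minimal Python sketch. It assumes the common textbook relation for axial modes, f = n × c ÷ (2 × L), which is not stated in this commentary: a standing wave forms when a whole number of half-wavelengths fits between two parallel surfaces, so the lowest mode has a wavelength twice the room dimension. The room dimensions and the function name are hypothetical, chosen only for illustration.

```python
SPEED_OF_SOUND_FPS = 1127.0  # feet per second, the figure used in this commentary


def axial_mode_frequencies(dimension_ft, count=3, speed=SPEED_OF_SOUND_FPS):
    """Return the first `count` axial mode frequencies (Hz) for one room dimension.

    Assumption: the textbook relation f_n = n * c / (2 * L), where L is the
    distance between two parallel surfaces and n = 1, 2, 3, ...
    """
    if dimension_ft <= 0:
        raise ValueError("dimension must be positive")
    return [n * speed / (2.0 * dimension_ft) for n in range(1, count + 1)]


# Hypothetical room, dimensions in feet (illustrative values only).
room = {"length": 60.0, "width": 40.0, "height": 20.0}

for name, size in room.items():
    modes = ", ".join(f"{f:.1f} Hz" for f in axial_mode_frequencies(size))
    print(f"{name:>6} ({size:.0f} ft): {modes}")
```

Under these assumptions, shrinking a dimension raises its mode frequencies, which is consistent with the point in 1.2 B that resonance can rise into the low-mid speech frequencies in small rooms.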

1.3 AA and sound have an interdependent relationship. Once established by design, right or wrong, they are inseparable. They coexist as a bidirectional, cause and effect engine.

2.0 WHAT IS SOUND?

2.1 Have you ever stopped to consider just what sound is? Of our five senses, hearing seems to be the one receiving the least consideration, though some modern research [1] suggests it’s the most important for our overall wellbeing and lifestyle. Why then do our listening environments garner so little attention and respect? The answer is at least three-fold.

A. Sound and acoustics are relatively complex subjects that are poorly understood by most people.

B. Sound systems and acoustic treatments must be customized for each room. There’s no such thing as a “one size fits all” solution. To reach a room’s desired audio quality and clarity, different sizes, shapes, locations, and quantities of loudspeakers and acoustic finishes are required.

C. Quality loudspeakers and acoustic treatments are often perceived as being expensive, bulky, and/or generally unsightly. While this has historically been true, the size and appearance of these products, at least, are improving.

2.2 Sound is not a tangible thing; it’s a transformative experience.

A. Sound is a relatively slow-motion, push/pull, molecular chain reaction that starts with a simple oscillation or vibration. These vibrations are created by any number of animals or objects.

B. The vibrations become a sound we can hear if they stimulate the nearby air molecules with enough energy to be detected. Given optimal conditions, the audible spectrum for humans is generally considered to be 20 Hz to 20,000 Hz.

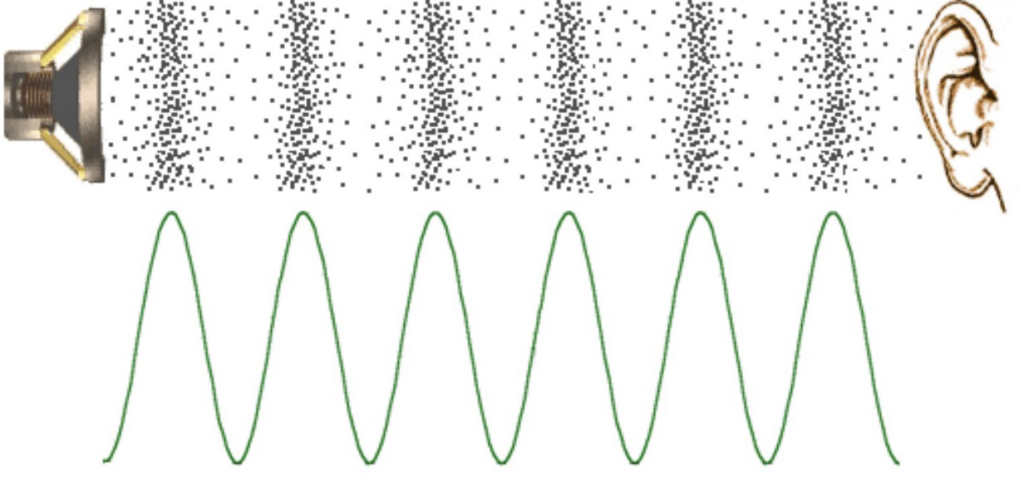

C. As an object vibrates, it pushes the neighboring air molecules out of its way. The molecules don’t really move much; they squeeze together, then return to their original position, creating small areas of higher and lower air pressure (Figure 1).

1. On the opposite side of the pushing motion, the corresponding “pull” causes the air pressure to decompress a little, effectively lowering the nearby pressure.

D. The stronger, or more violent, the oscillating motion, the greater the change in pressure. This results in a louder version of the vibration or sound.

E. Barring obstruction, these tiny changes in air pressure move away from the source of the vibration(s), and travel through the air rather slowly, until they strike another set of objects that vibrate sympathetically. Those sympathetically-vibrating objects are our eardrums.

F. In its most rudimentary form, the transformative circuit that culminates in audible sound looks like this: A vibrating object > a chain reaction of air molecules pushing toward, and pulling away from one another > one or more sympathetically-vibrating eardrums > brain > human response.

G. The experience: Sound is created when air molecules are pushed around and perceived by our ear/brain system. The individual air molecules make no audible sound.

2.3 Sound can also be thought of as a force, somewhat like gravity or magnetism. But unlike gravity with its ubiquitous downward force, or magnetism with its “sticky” bi-polar forces, think of sound as a force that generates molecular chain reactions. When man-made and intentional, it is a force that can easily be activated or deactivated, when and if required.

A. Intentional sound waves can easily move through air in any direction; can be manipulated and steered into relatively definable areas; and with proper care and handling, are generally adaptable and responsive to our needs.

B. However, sound waves cannot be bent around corners, or penetrate solid objects, without significant quality, quantity, and/or temporal changes; usually unwanted.

1. When sound waves bend around objects or corners, it’s called diffraction.

2. When sound waves strike solid objects, some of that energy may be transferred, via structure-borne vibrations, and plague noise isolation efforts.

2.4 There must be some type of compressible medium for sound to exist. It doesn’t matter what type of medium or atmosphere, as long as there are molecules that can be pushed around, or stimulated into distinct patterns of greater and lesser pressure.

A. Water is a good example of a medium that easily transmits sound. So are steel and concrete under the right conditions.

B. A vacuum has no atmosphere; therefore, sound does not exist in a vacuum.

2.5 Frequency, wavelength, and the speed of sound:

A. Sounds can be defined by either their “frequency” or “wavelength”. Every discrete frequency has a corresponding wavelength. While frequency and wavelength are directly correlated, and interchangeable, each word defines something different.

1. When identifying a specific frequency, in cycles per second, the unit of measurement is Hertz (after Heinrich Hertz), which is abbreviated Hz.

a. To say that a sound has a frequency of 500 Hz means something is vibrating, back and forth, 500 times each second.

B. As any discrete frequency is produced and propagates outward at the speed of sound, the linear distance required to complete one full sinusoidal wave cycle is its wavelength.

1. Thus, the wavelength of a pure 500 Hz tone is about 2.25′, and a 2.25′ wave is a 500 Hz tone. They are identical in size and sound.

C. When traveling through air, the speed of sound is a “generalized” constant. The number used here, or referenced throughout, is 1,127 feet per second (FPS). This number will fluctuate slightly based on temperature and humidity, but not enough to be meaningful in the context of this tutorial.

1. The complex waves of music and speech all travel through air at the same speed, regardless of frequency.

2. If you’re interested, the math conversion from frequency to wavelength is very simple: Divide the speed of sound (1,127) by whatever frequency you are interested in. For example: 1,127 FPS ÷ 320 Hz = 3.52’.

D. Now compare the speed of sound, at 1,127 FPS, to the speed of light, which is 186,000 miles per second.

1. Because the wavelengths of visible light are so small, directing, shaping and containing light is many orders of magnitude easier than shaping, containing and controlling sound. Why? Because the longer the wavelength, the more difficult it is to control, dissipate, and/or stop, once it’s in motion.

2. It’s relatively easy to completely block, or limit the visibility of any unwanted light. One way is to simply close a door between two rooms. If needed, and with little effort or specialized planning, the light in room A can be completely eliminated from room B.

3. This same exercise is much less effective if you want to block, or limit, some or all audible sounds between the two rooms. Low- and mid-frequency sounds can easily penetrate doors and walls. Speech privacy between two rooms is a perfect example.

4. Only with careful structural planning, and/or specialized acoustic treatments, can we completely stop and/or block all sound.

E. The understanding of how frequencies relate to wavelengths is the critical link between sound, acoustics, and architecture. Once sound is translated into physical dimensions that architects or designers can easily understand, it begins to unlock the mystery of why effective acoustic treatment needs to be applied in so many sizes and shapes.
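The conversion described in 2.5 C can be sketched in a few lines of Python. The wavelength function simply divides the commentary’s 1,127 FPS by frequency. The temperature function is an addition, not something taken from this commentary: it assumes the common linear approximation for dry air, c ≈ 331.3 + 0.606 × T meters per second (T in degrees Celsius), and is included only to show how small the fluctuation is. Function names are hypothetical.

```python
SPEED_OF_SOUND_FPS = 1127.0  # feet per second, as used throughout this commentary


def wavelength_ft(frequency_hz, speed=SPEED_OF_SOUND_FPS):
    """Wavelength in feet: speed of sound divided by frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed / frequency_hz


def speed_of_sound_fps(temp_c):
    """Approximate speed of sound in dry air (ft/s) at temp_c degrees Celsius.

    Assumption: the linear approximation c = 331.3 + 0.606 * T (m/s),
    which is not taken from this commentary.
    """
    metres_per_second = 331.3 + 0.606 * temp_c
    return metres_per_second / 0.3048  # 1 ft is exactly 0.3048 m


# Frequencies referenced in the text: the audible limits, plus 320 Hz and 500 Hz.
for f in (20, 320, 500, 20000):
    print(f"{f:>6} Hz -> {wavelength_ft(f):7.3f} ft")

# How much the speed shifts across a plausible range of room temperatures.
for t in (10, 20, 30):
    print(f"{t:>3} C -> {speed_of_sound_fps(t):.0f} ft/s")
```

With this approximation, 20 °C (68 °F) lands at roughly 1,127 FPS, matching the figure used here.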

3.0 SOUND PROPAGATION

3.1 Desired sound, not random noise, can be propagated or dispersed with some reasonable control. This is primarily done via the use of specific loudspeaker types and technologies.

A. Once again, the wavelength of various sounds largely limits how much control is available. In the lower frequency spectrum, sound emanating from instruments and/or loudspeakers is generally omni-directional. As frequencies increase in pitch, wavelengths get smaller and smaller, and more directional control can be gained.

B. 500 Hz is a good point of reference. Amplified sound – at or above 500 Hz (2.25’ wavelength) – can often be directionally controlled. Controlling the direction of sound waves becomes more and more difficult for frequencies below about 500 Hz. Difficult, but not impossible.

3.2 Sound wave modeling:

A. Sound travels through air in longitudinal waves. While these waves may not be too difficult to visualize at the most basic level, their reflective behavior can be quite difficult to accurately calculate and predict in three-dimensional space.

B. AA correlates the myriad frequencies and wavelengths of audible sound, with the complexities of architectural geometry, and the influences of multiple finish materials. Together, these factors are very difficult to compute using manual methods.

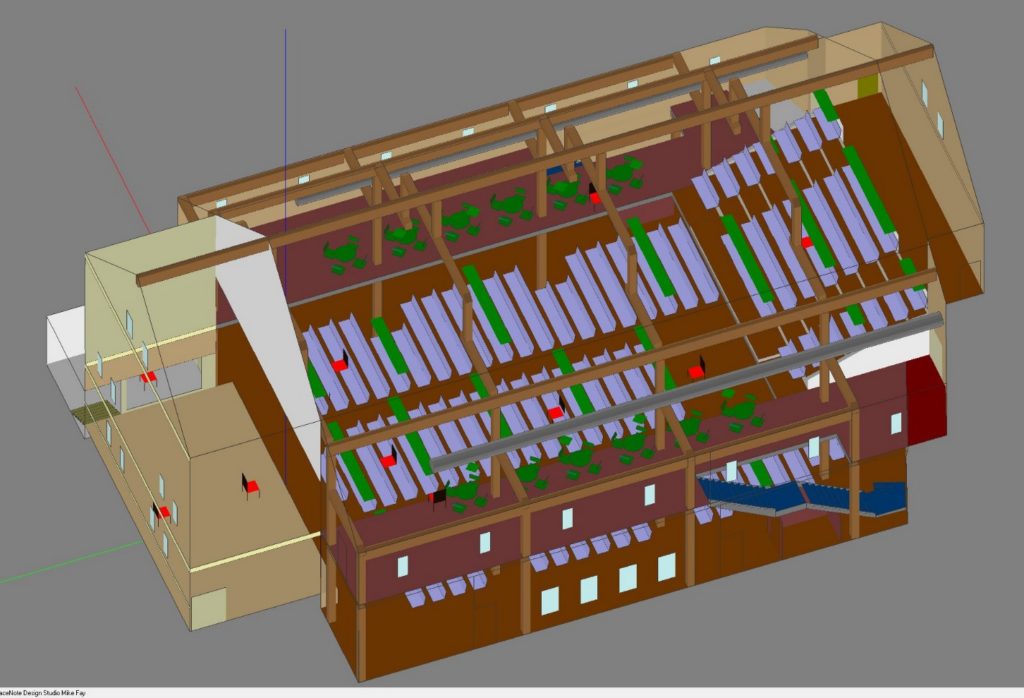

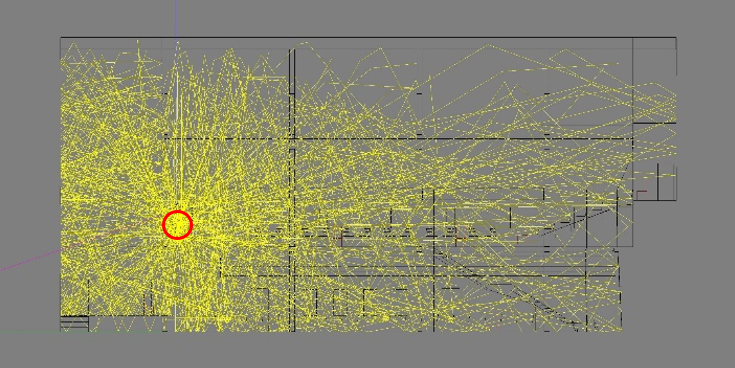

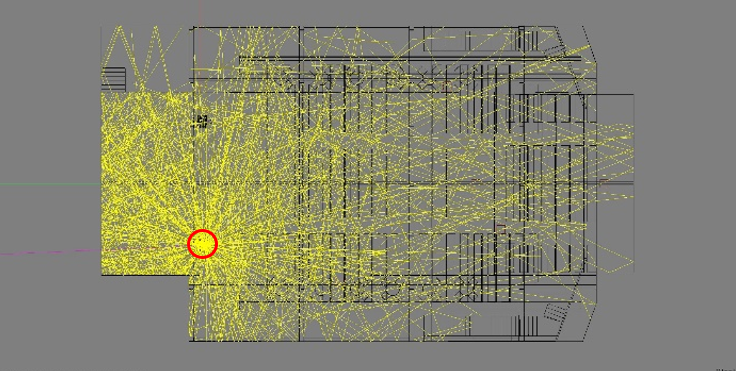

C. Today, we rely heavily on computer-based calculations and simulations to help model (Figures 2-4) and solve for the most critical and complex acoustical questions.

D. “Ray tracing” theory and software algorithms are used to model the behavior of sound as it radiates from a virtual sound source, and bounces around in a fully-enclosed 3D computer model.

1. The virtual rays that are used in modeling programs can be thought of as discrete beams of light. Imagine a star burst of light beams, with each beam expanding out from a virtual source; like a large fireworks artillery shell bursting in the night sky.

a. Most modeling programs emit these beams at a spherical density of between 1 and 5 degrees of separation between each beam.

E. These virtual rays can effectively approximate the behavior of direct and reflected sound as it travels through air. But there’s a catch:

1. Ray tracing is best used to represent only a portion of the audible frequency spectrum. More specifically, only about 6 of the 10 total octaves of audible sound (between 320 Hz and 20 kHz) can be modeled with reasonable accuracy.

a. When using ray-tracing techniques, accurately modeling frequencies between 20 Hz and 320 Hz is questionable, primarily because the reflected sound energy is moving into the modal (room resonance) frequency regions. The modeling software becomes less and less accurate and reliable.

b. For reference, those wavelengths are 56.35’ (this is not a typo) for 20 Hz, and 3.52’ for 320 Hz.
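For a rough sense of scale, the 1 to 5 degrees of ray separation mentioned in 3.2 D can be turned into an approximate ray count. The sketch below is only an estimate under a stated assumption: each ray “owns” a small square patch of the sphere, one separation angle on a side, so the count is the full sphere (4π steradians) divided by the patch. Actual modeling programs may distribute rays differently, and the function name is hypothetical.

```python
import math


def estimated_ray_count(separation_deg):
    """Rough number of rays needed to cover a full sphere.

    Assumption: each ray covers a square patch separation_deg on a side,
    so count = 4*pi steradians / (separation in radians) ** 2.
    This is an estimate, not how any particular program works.
    """
    if separation_deg <= 0:
        raise ValueError("separation must be positive")
    patch_sr = math.radians(separation_deg) ** 2
    return round(4.0 * math.pi / patch_sr)


for sep in (1, 2, 5):
    print(f"{sep} degree spacing -> about {estimated_ray_count(sep):,} rays")
```

The estimate shows why finer spacing is costly: halving the separation angle roughly quadruples the number of rays to trace.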

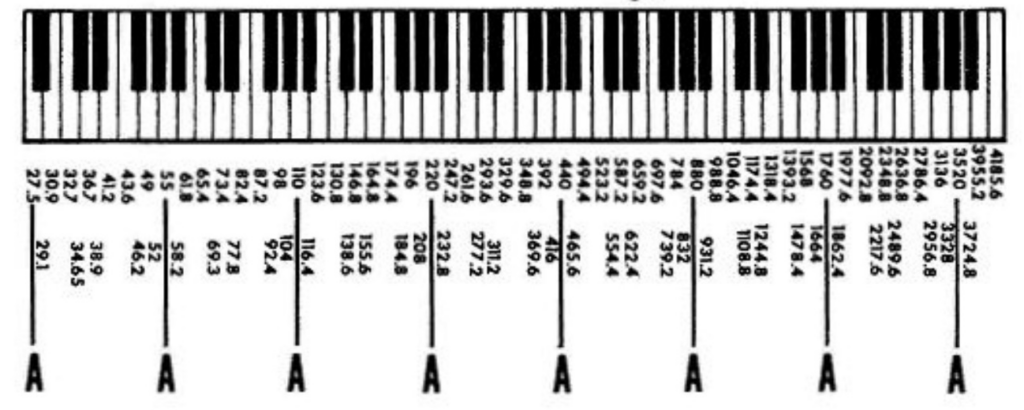

F. An “octave” is a musical term. All musical notes have a correlated, fundamental frequency and wavelength. When the frequency of any musical note is halved or doubled, its wavelength is doubled or halved, respectively. These are octave intervals (Figure 5).

1. An octave below A440 is A220. An octave above is A880. The numbers represent the fundamental frequency of these three musical notes. Each has a corresponding wavelength that doubles or halves from one note to the next.

a. The wavelength of A440 is 2.56’. A220 is 5.12’, and A880 is 1.28’.

b. Across just these three notes, which span two octaves, the wavelengths vary by a factor of 4 between the highest and lowest note.
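The octave arithmetic in this section can be checked with a short Python sketch. It doubles a starting frequency until it passes an upper bound and prints the matching wavelength at 1,127 FPS. The upper bound of 20,480 Hz is an assumption made here so that the tenth doubling from 20 Hz is included; the 320 Hz split between the modal region and the ray-tracing region comes from 3.2 E. The function name is hypothetical.

```python
SPEED_OF_SOUND_FPS = 1127.0  # feet per second, as used in this commentary
RAY_TRACING_FLOOR_HZ = 320.0  # lower limit for ray tracing, per 3.2 E


def octave_series(start_hz, stop_hz):
    """Frequencies from start_hz upward, doubling each step, up to stop_hz."""
    if start_hz <= 0:
        raise ValueError("start frequency must be positive")
    freqs = []
    f = float(start_hz)
    while f <= stop_hz:
        freqs.append(f)
        f *= 2.0
    return freqs


# Assumption: stop at 20,480 Hz so the last doubling from 20 Hz is kept.
edges = octave_series(20, 20480)
for f in edges:
    region = "ray tracing" if f >= RAY_TRACING_FLOOR_HZ else "modal region"
    print(f"{f:>8.0f} Hz  {SPEED_OF_SOUND_FPS / f:8.3f} ft  {region}")

print("octave intervals:", len(edges) - 1)

# The three A notes discussed above: each doubling halves the wavelength.
for f in octave_series(220, 880):
    print(f"A{f:.0f}: {SPEED_OF_SOUND_FPS / f:.2f} ft")
```

Counting the intervals on either side of 320 Hz gives the four-below, six-above split described in 3.2 E.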

3.3 Delivery methods:

A. There are three primary, yet distinctly different, methods of transmitting the sounds (propagation) of a performance or presentation to a live audience. They are:

1. Acoustically – with no electronic reinforcement.

a. Pure acoustic sound propagation is generally reserved for very sophisticated rooms and end users. Symphonic concert halls come to mind. These rooms require very strict design criteria and construction techniques, and though important, are not the primary focus of this commentary.

2. Point source, or line array, sound reinforcement systems.

a. This commentary is written with these reinforcement systems clearly in mind, as they represent the most commonly-specified technologies used in facilities that seat more than about 50 people.

b. These are also the systems that are capable of producing the widest frequency response, the greatest amount of power and volume, and that will generally benefit most from being installed in a good acoustical environment.

3. Overhead, distributed loudspeaker systems.

a. These too are common systems, but are typically specified to support background music, light foreground music, and/or speech-only sound reinforcement.

b. These systems are often specified when frequency response and/or power requirements are more limited.

c. The overhead, distributed loudspeaker system is often the most effective approach when a low (≤ 15’), flat ceiling is specified.

B. There are other delivery methods, but they usually operate independently of a room’s architectural acoustics.

FINAL THOUGHTS

That’s it for this issue. Hopefully, you learned a few things you didn’t already know, and are looking forward to what comes next.

For fully-enclosed venues, sound exists within the constraints of the physical dimensions of space, plus time. Yet when isolated to any discrete listening position, it cannot be easily defined by height, width, depth, mass, time, color, smell, or taste. As a result it’s often underappreciated, and/or ignored, as something of critical importance within the three-dimensional confines of a building.

If you remember nothing else from this series, please remain focused on this: For better or worse, every architectural shape, feature, location, and finish influences sound behavior and quality. When and where needed, almost all light is welcome; many sounds are not.

References

[1] https://www.acousticbulletin.com/our-visual-focus-part-2-the-eye-versus-the-ear

Peer Review Team

A special thank you goes out to Neil Shade (Acoustical Design Collaborative, Ltd), and Charlie Hughes (Biamp and Excelsior Audio Labs) for assisting with notes and comments that made this work presentable.