Setting Priorities

In some cases, it may be useful to ask the user for absolute values (e.g., consistency of SPL within the audience area), but even these must be bounded in some way (for example, it is not possible to achieve ±0.00 dB variation at all frequencies with a finite-size source).

More often than not, what’s most useful is an understanding of relative priority: normalized, unitless markers of what the user considers most important.

Think of it as sending a friend to an unfamiliar market to do your shopping for you.

A typical dialogue might go like this:

Person 1: Hey, can you pick some milk up from the market for this recipe?

Person 2: Sure, how much should I get?

Person 1: As much as you can for Y dollars.

Person 2: As much as I can? You don’t care about the quality?

Person 1: Well, actually I’d prefer organic milk.

Person 2: So as much organic milk as I can get for Y dollars. What percent fat?

Person 1: Oh, right. It should be T% fat. But it’s most important that it doesn’t cost more than $Y, and is organic. Just make sure you get at least Z gallons, since that’s what the recipe I’m making calls for. Well, maybe you can go a dollar or two over but only if you get the better milk for that.

Person 2: OK, is there anything I should avoid?

Person 1: Just don’t get Brand Q – that was terrible last time we had it. If only Brand Q is available for Y dollars, get Brand H, even if it’s more expensive by 1 or 2 dollars. But if it’s more than Y+1 or 2 dollars, it’s OK to get non-organic.

Person 2 (confusedly): OK, I’ll try to remember all of that…

This may seem like an absurd example of an optimization problem, but it is not so unusual. In fact, the number of variables in this example (cost, volume, brand, quality and percentage fat) is generally far smaller than the number a sound system prediction tool has to handle.
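To make the analogy concrete, the shopping dialogue can be recast as a small constrained optimization: some requests are hard limits (never Brand Q, never more than a dollar or two over budget), while the rest are relative priorities folded into a single score. The Python sketch below is illustrative only. The names `Milk` and `choose_milk`, the scoring terms, and the default tolerances are assumptions made for this example, and it simplifies the dialogue by buying exactly the volume the recipe calls for.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Milk:
    brand: str
    organic: bool
    fat_percent: float
    price_per_gallon: float


def choose_milk(options, budget, gallons, target_fat, banned_brands,
                priorities, overspend_allowance=2.0, fat_tolerance=2.0):
    """Return the best-scoring feasible option, or None if nothing is feasible."""
    # Normalize the raw priorities into unitless weights that sum to 1.
    total = sum(priorities.values())
    weights = {name: value / total for name, value in priorities.items()}

    best, best_score = None, float("-inf")
    for milk in options:
        cost = milk.price_per_gallon * gallons

        # Hard constraints: a banned brand or an excessive overspend is never accepted.
        if milk.brand in banned_brands or cost > budget + overspend_allowance:
            continue

        # Soft terms, each scaled to the range 0..1 so the weights are comparable.
        organic_term = 1.0 if milk.organic else 0.0
        overspend = max(0.0, cost - budget)
        cost_term = 1.0 if overspend == 0.0 else 1.0 - overspend / overspend_allowance
        fat_term = max(0.0, 1.0 - abs(milk.fat_percent - target_fat) / fat_tolerance)

        score = (weights["organic"] * organic_term
                 + weights["cost"] * cost_term
                 + weights["fat"] * fat_term)
        if score > best_score:
            best, best_score = milk, score
    return best
```

With priorities such as `{"organic": 3, "cost": 3, "fat": 1}`, an organic Brand H that runs one dollar over budget outscores an in-budget non-organic brand. Once Brand H exceeds the overspend allowance, it drops out as infeasible and the non-organic option is chosen instead, which mirrors Person 1's instructions.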

Yet with a similar amount of input from the user, the program needs to determine the best result in the least time. This means that the user input must be highly targeted, steering operators to accurately characterize their goals without asking for too much absolute specificity unless it is actually required (e.g., to satisfy the noise police, who are holding calibrated absolute SPL meters).

Thus there is a particularly significant emphasis on the user interface, as it is the user’s only means to access the optimization system. If the system is unable to accurately characterize the user’s priorities, the result will not be consistent with the user’s goals, and the input parameters will have to be adjusted slightly and the optimization tried again (these iterations come on top of the iterations that the optimization routine performs internally). But the patience that users in the working world will have for this is limited.

On the opposite side of the coin, an interface that asks the user for excessive detail only bogs down both user and computer. It offsets the advantages and convenience that an optimization offers in the first place (likely without providing any better results) and establishes highly specific expectations that may or may not be achievable. The interface must strike a fine balance; it is unquestionably the linchpin of this technology.

What This Means Today

Having looked at the input and output parameters in the optimization model and the particular emphasis that this places on the user interface, perhaps it’s a good time to step back and identify what all of this means from the perspective of the end-user.

On a day-to-day basis, what does this really change when it comes to setting up sound reinforcement systems? The answer is not necessarily simple: in some ways, a lot, and in some ways, very little.

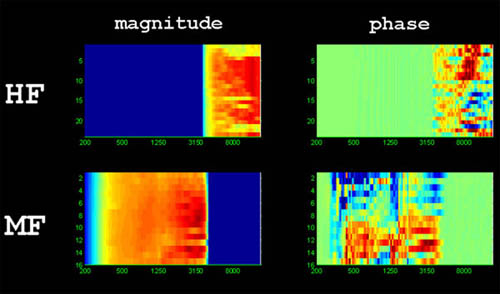

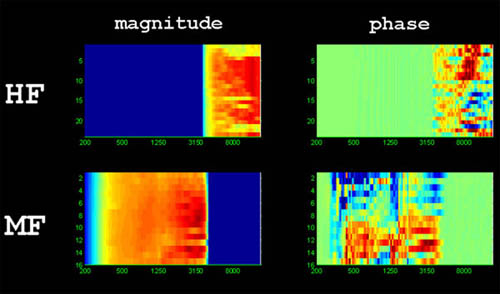

To start with, users wishing to fully leverage the benefits of numerical optimization must dispense with the “point-and-shoot” mentality. An examination of the accompanying graphic (which illustrates the magnitude and phase response per array element, by frequency) should sufficiently prove this.

Practically speaking, there is no way to manually interpret or validate the output data, especially when it combines mechanical (i.e., splay angle and trim height) and electronic (i.e., signal processing per zone or array element) components. Rest assured, this makes the operators no less in control or aware of the workings of their system; instead, it frees them to think about the higher-level priorities. But clearly, the nature of the operator’s involvement must change.

A different approach is also necessary in troubleshooting ‘optimized’ systems, because the typical signs of ‘trouble’ (polarity inversions, level shift, drastic equalization, etc.) may very well be required to produce the desired performance result. What this means is that manufacturers must implement diagnostic systems to check the basic parameters, allowing the user to understand the system’s status on a much higher level without having to examine the system at the granular level, as in years past.

In other words, the operator can never be left to wonder if all of the drivers have been correctly wired, if any are blown, or if the optimization result has an error. The system must be able to determine this for itself and report back to the operator on both the diagnosis and prognosis. Equally important, the user must be able to place some trust in this diagnostic system and in the results of the optimization engine.
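One way to picture such a diagnostic layer is as a comparison between what the optimization intended for each array element and what the element actually reports, so that a deliberate polarity inversion or level offset is not mistaken for a fault. The Python sketch below is hypothetical throughout: `ElementState`, `diagnose`, the `driver_ok` self-test flag, and the 0.5 dB tolerance are assumptions for illustration, not any manufacturer's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ElementState:
    gain_db: float
    polarity_inverted: bool
    driver_ok: bool = True  # hypothetical result of an element self-test


def diagnose(intended, measured, gain_tolerance_db=0.5):
    """Compare measured element states against the optimized targets.

    Both arguments map an element ID to an ElementState. Returns a list of
    human-readable fault messages; an empty list means no fault was found.
    """
    faults = []
    for element_id, target in intended.items():
        actual = measured.get(element_id)
        if actual is None:
            faults.append(f"{element_id}: no response from element")
            continue
        if not actual.driver_ok:
            faults.append(f"{element_id}: driver self-test failed")
        # Inverted polarity is only a fault if the optimization did not ask for it.
        if actual.polarity_inverted != target.polarity_inverted:
            faults.append(f"{element_id}: polarity differs from optimized setting")
        if abs(actual.gain_db - target.gain_db) > gain_tolerance_db:
            faults.append(f"{element_id}: gain deviates from optimized setting")
    return faults
```

The key design choice in this sketch is that the reference is the optimized target rather than a neutral default, so the report stays at the level the operator cares about: which elements, if any, are not doing what the optimization asked of them.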