Reply by KSTR

This simple sentence contains a whole lot of wisdom…

Time discrete sampling is made to transfer as much information as possible, not to give as much information as possible in directly readable numbers.

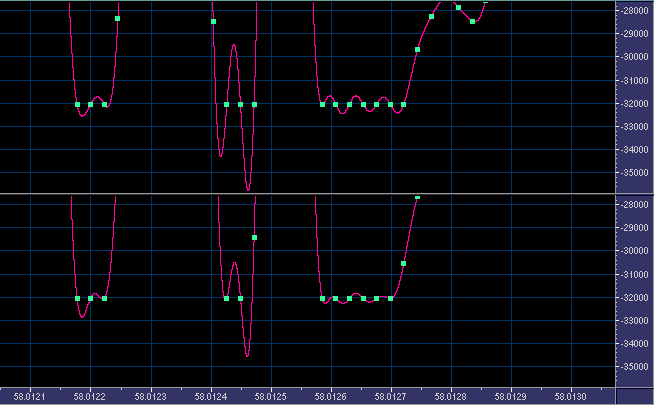

Just for the record, here is an example (Figure 2) of intersample overs (IS-overs) in a real recording (a well-known specimen of the loudness-race variety):

At this particular point (arbitrarily chosen), the over is “only” about +1dBFS, going by CoolEdit’s reconstruction. I didn’t try manual upsampling here, but I did once with Bruno’s worst-case example and got peaks of 10dB and above, depending on which upsampler I used.

This leads to a question: Don’t these reconstruction level meters work internally with upsampling, which, as far as I understand, is accomplished by interpolating the “missing” samples? Therefore, in theory, working with extreme upsampling (say, above fs=500k or so) should give enough margin to avoid IS-overs, assuming one leaves some reasonable safety margin.

In other words, what is the difference, if any, between the algorithm used by an interpolating peak meter (or by the waveform drawing in CoolEdit) and the one used for upsampling?

Reply by bruno putzeys

Interpolation includes the use of a steep low-pass filter so adding another one doesn’t change things. The order of interpolation is the number of taps in the first filter stage. The maximum intersample peak indeed increases with tap count, albeit logarithmically.
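To make the logarithmic growth concrete, here is a minimal sketch. It assumes an unwindowed, truncated sinc as the interpolation filter and looks only at the midpoint between two samples, where the worst-case full-scale input has every sample at ±1 with the sign of the matching sinc tap. The function names are made up for illustration; real upsamplers use windowed or otherwise optimised filters, so their numbers will differ.

```python
import math

def worst_case_peak(taps):
    """Worst-case midpoint value for a truncated sinc with `taps` taps.

    At the midpoint, the input samples sit at offsets of 0.5, 1.5, 2.5, ...
    on either side, and |sinc(k + 0.5)| = 1 / (pi * (k + 0.5)).
    A full-scale input whose signs match the taps sums these magnitudes.
    """
    half = taps // 2
    return 2.0 * sum(1.0 / (math.pi * (k + 0.5)) for k in range(half))

def to_db(ratio):
    """Convert a linear amplitude ratio to decibels."""
    return 20.0 * math.log10(ratio)

# Each quadrupling of the tap count adds roughly the same linear amount,
# which is what logarithmic growth looks like.
for taps in (16, 64, 256, 1024, 4096):
    peak = worst_case_peak(taps)
    print(f"{taps:5d} taps: peak = {peak:.3f} ({to_db(peak):+.2f} dBFS)")
```

The per-quadrupling increment tends towards (2/π)·ln 4 in linear terms, so the peak keeps growing with filter length but ever more slowly.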

This is only the case for this extreme test signal though. For normal audio you won’t see a difference between a normal filter and an extremely long one. The upsampling filter in an interpolating meter or side chain can remain reasonable. Upsampling by a factor of 8 and adding a tiny smidgen of headroom is all you need to be safe for all practical purposes.
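As a rough sketch of what an interpolating meter does (not how any particular product implements it), the code below upsamples by 8 with a Hann-windowed sinc and reports the largest reconstructed value. The window choice, the 16-sample half width, and the names `interp_kernel` and `true_peak` are assumptions made for this example. The test signal is the textbook case of a sine at fs/4 sampled 45° away from its crests, so every sample sits at full scale while the waveform in between goes higher.

```python
import math

def interp_kernel(factor, half_width):
    """Hann-windowed sinc, sampled at `factor` times the input rate."""
    n_half = factor * half_width
    taps = []
    for i in range(-n_half, n_half + 1):
        t = i / factor  # time in input-sample periods
        sinc = 1.0 if i == 0 else math.sin(math.pi * t) / (math.pi * t)
        window = 0.5 * (1.0 + math.cos(math.pi * i / n_half))
        taps.append(sinc * window)
    return taps

def true_peak(samples, factor=8, half_width=16):
    """Largest absolute value of the signal reconstructed at `factor` x rate."""
    kernel = interp_kernel(factor, half_width)
    n_half = factor * half_width
    peak = 0.0
    for m in range(len(samples) * factor):
        # y[m] = sum over n of x[n] * h[m - n * factor], kernel support only
        n_lo = max(0, math.ceil((m - n_half) / factor))
        n_hi = min(len(samples) - 1, (m + n_half) // factor)
        acc = 0.0
        for n in range(n_lo, n_hi + 1):
            acc += samples[n] * kernel[m - n * factor + n_half]
        peak = max(peak, abs(acc))
    return peak

# Sine at fs/4, phase 45 degrees, scaled so the samples read +1, +1, -1, -1, ...
samples = [math.sqrt(2.0) * math.sin(2 * math.pi * n / 4 + math.pi / 4)
           for n in range(64)]
sample_peak = max(abs(s) for s in samples)
peak = true_peak(samples)
overs_db = 20.0 * math.log10(peak / sample_peak)
print(f"sample peak {sample_peak:.3f}, reconstructed peak {peak:.3f}, "
      f"over = {overs_db:+.2f} dB")
```

The ideal reconstruction of this signal peaks about 3 dB above the sample values; a short windowed filter like this one lands close to that, which is the point made above about reasonable filter lengths being enough for normal material.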

The only place where one would actually consider the extreme test signal is when deciding on the accumulator word length of an upsampling filter. The sum of absolute values of the coefficients should still fit without overflowing.
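A small sketch of that sizing rule, assuming integer (fixed-point) coefficients and signed two's-complement input. The coefficient values are hypothetical and only there to have something to sum; `accumulator_bits` is an illustrative helper, not part of any library.

```python
def accumulator_bits(int_coeffs, input_bits):
    """Signed accumulator width that cannot overflow for any input.

    The largest possible |output| is the largest input magnitude times
    the sum of the absolute coefficient values.
    """
    max_input = 1 << (input_bits - 1)  # magnitude of the most negative code
    worst_case = max_input * sum(abs(c) for c in int_coeffs)
    return worst_case.bit_length() + 1  # +1 for the sign bit

# Hypothetical 8-tap integer coefficients, 24-bit input samples.
coeffs = [-3, 12, -40, 160, 160, -40, 12, -3]
bits = accumulator_bits(coeffs, 24)
print(f"sum of |coeffs| = {sum(abs(c) for c in coeffs)}, "
      f"accumulator needs {bits} bits")
```

This bound is deliberately conservative: it covers the extreme test signal, which normal audio never comes close to.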

Meanwhile, Back In Our Original Discussion

Reply by bruno putzeys

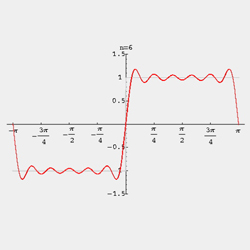

Gibbs phenomenon indeed and fundamentally you’re stuck with it. It’s not just “fact”, it’s a mathematical truth. Pre-ringing is a necessary result of having a sharp low-pass filter with a linear phase response. Actually, very old converters had no pre-ringing because they did not have linear phase.

They had tons of post-ringing instead.
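The pre-ringing of a sharp linear-phase filter is easy to see in a step response. The sketch below assumes a plain truncated sinc (the crudest sharp low-pass, chosen only for brevity) with a cutoff of a quarter of the sample rate; the helper names are made up for this example. Because the taps are symmetric, the response dips below zero before the edge by the same amount it overshoots afterwards.

```python
import math

def lowpass_taps(half_width, cutoff=0.25):
    """Symmetric (linear-phase) truncated-sinc low-pass.

    `cutoff` is in cycles per sample; taps are normalised to unity DC gain.
    """
    taps = []
    for i in range(-half_width, half_width + 1):
        if i == 0:
            taps.append(2.0 * cutoff)
        else:
            taps.append(math.sin(2 * math.pi * cutoff * i) / (math.pi * i))
    total = sum(taps)
    return [t / total for t in taps]

def step_response(taps):
    """Running sum of the taps, i.e. the filter's response to a unit step."""
    out, acc = [], 0.0
    for t in taps:
        acc += t
        out.append(acc)
    return out

taps = lowpass_taps(64)
step = step_response(taps)
undershoot = min(step)        # ringing before the edge (below 0)
overshoot = max(step) - 1.0   # ringing after the edge (above 1)
print(f"pre-ringing undershoot  {undershoot:+.4f}")
print(f"post-ringing overshoot  {overshoot:+.4f}")
```

A filter without linear phase can move that ringing to after the edge, but as long as it stays sharp, the ringing itself does not go away.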

Eliminating ringing, pre or post, requires shallow filters and/or non-linear-phase filters. If you still want a flat response to about 20kHz and no aliasing/imaging, this implies a higher sampling rate.

Peter Craven pointed out that in a high-sampling-rate system, all filters could be made sharp at fs/2, and a single slow-rolloff filter starting at 20kHz would then reduce the ringing. You don’t want every filter to be slow-rolloff, because a cascade of slow-rolloff filters adds up to a sharp filter, so there would be no point in converter manufacturers including them as standard.