Reply by John Roberts {JR}

While I am still figuring out what I don’t know in this area, a few things may be worth considering.

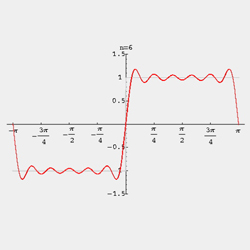

As another poster suggested, increasing the sample rate should reduce the size of this effect. Alternatively, in a “lower the water instead of raising the bridge” approach, limiting the rate of change (slew rate?) of the source material should help too.

I suspect one could predict the worst-case over-range for a given passband (or slew rate) and sample rate.
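For the simplest case, one steady sine, that prediction is straightforward. The sample nearest any peak is at most half a sample period away, so a sine at frequency f sampled at f_s never has its largest sample below cos(πf/f_s) times its true peak (for f < f_s/2). The sketch below turns that into a dB bound; the function name is made up for illustration. It covers only a single sine: sums of tones can overshoot further, so it understates the worst case for real program material.

```python
import math


def sine_overrange_bound_db(freq_hz: float, sample_rate_hz: float) -> float:
    """Upper bound (dB) on how far a steady sine's true peak can exceed its largest sample.

    The nearest sample to any peak lies at most half a sample period away, so the
    largest sample is at least cos(pi * f / fs) times the true peak (for f < fs / 2).
    Single-sine case only; this is an illustrative helper, not a standard formula name.
    """
    ratio = freq_hz / sample_rate_hz
    if not 0.0 <= ratio < 0.5:
        raise ValueError("frequency must be in [0, fs/2)")
    return -20.0 * math.log10(math.cos(math.pi * ratio))


if __name__ == "__main__":
    # Raising the sample rate shrinks the bound for the same tone.
    for fs in (44100.0, 96000.0):
        for f in (1000.0, 11025.0, 15000.0):
            print(f"{f:>7.0f} Hz @ {fs:>7.0f} Hz: <= {sine_overrange_bound_db(f, fs):.2f} dB")
```

At 11025 Hz (f_s/4) and 44.1 kHz the bound is about 3.01 dB, reached when every peak falls exactly between two samples. The same tone at 96 kHz gives a much smaller figure, which matches the point about raising the sample rate.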

For perspective, perfect square waves and polarity flips aren’t commonly encountered in recording. Real-world audio signals are somewhat band-limited by their nature and by the capture and pre-processing hardware.

For critical mastering, when trying to push the hottest signal without clipping, it seems this over-range could be calculated and monitored, and the headroom factor adjusted as needed.

This may be more hands-on than typical equipment users are comfortable with. Alternatively, the process could run with a conservative headroom factor, and a second operation could then normalize the hottest peak back up to full scale (FS).
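The second pass only has to apply one constant gain. Because reconstruction is linear, scaling every sample by g scales the reconstructed waveform, and therefore its true peak, by exactly g as well, so the gain can be computed from a single peak reading. A minimal sketch, assuming that reading was taken with a reconstructed (oversampled) meter; the function and parameter names are hypothetical.

```python
from typing import List, Sequence, Tuple


def normalize_to_ceiling(
    samples: Sequence[float],
    measured_peak_dbfs: float,
    ceiling_dbfs: float = 0.0,
) -> Tuple[List[float], float]:
    """Apply one constant gain so a previously measured peak lands on the ceiling.

    `measured_peak_dbfs` is assumed to come from a reconstructed (oversampled)
    meter; with a sample-point reading the output could still contain overs.
    Returns the scaled samples and the gain that was applied, in dB.
    """
    gain_db = ceiling_dbfs - measured_peak_dbfs
    gain = 10.0 ** (gain_db / 20.0)
    return [s * gain for s in samples], gain_db


if __name__ == "__main__":
    # Pass 1 (not shown) ran with generous headroom; suppose a reconstructed
    # meter then read a true peak of -3.5 dBFS on its output.
    first_pass = [0.2, -0.45, 0.6, -0.3]
    out, applied = normalize_to_ceiling(first_pass, measured_peak_dbfs=-3.5, ceiling_dbfs=-0.1)
    print(f"applied {applied:+.1f} dB")
```

Keeping the gain constant is the design choice that matters here: a gain that varies over time would change the waveform's shape and could create new intersample peaks of its own.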

New technology always brings new kinds of gotchas, but I don’t think this is a problem unless equipment designers ignore it completely and you spend too much time around digital full scale.

Reply by AndreasN

Discrete-time sampling is designed to transfer as much information as possible, not to present as much of it as possible in directly readable numbers.

The numbers (small slices of time) only tell a fraction of the whole story. The rest needs reconstruction to become accessible. The solution is very simple: use reconstructed metering!

It keeps amazing me that this is not more common in mastering, where going at or near the ceiling is a near-constant part of the game. Sample-point metering does not tell you the peak level of the signal that is actually being created! So in essence, a lot of people work blind…
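Roughly, a reconstructed meter evaluates the band-limited waveform between the samples (oversampling) and takes the peak of that, instead of the largest sample value. The sketch below computes both readings. For brevity it treats the buffer as one period of a periodic signal and uses the matching ideal interpolation kernel, which is exact for test tones that complete a whole number of cycles; real true-peak meters (for example those following ITU-R BS.1770) use a finite oversampling FIR filter instead. All function names are illustrative.

```python
import math
from typing import List, Sequence


def periodic_sinc(u: float, n: int) -> float:
    """Ideal band-limited interpolation kernel for one period of n samples."""
    if abs(u - round(u)) < 1e-12:
        return 1.0 if round(u) % n == 0 else 0.0
    if n % 2 == 0:
        # Even n: the Nyquist bin is split evenly between +fs/2 and -fs/2.
        return math.sin(math.pi * u) / (n * math.tan(math.pi * u / n))
    return math.sin(math.pi * u) / (n * math.sin(math.pi * u / n))


def reconstruct(samples: Sequence[float], factor: int) -> List[float]:
    """Evaluate the band-limited waveform at `factor` points per sample period."""
    n = len(samples)
    out = []
    for m in range(n * factor):
        t = m / factor
        out.append(sum(x * periodic_sinc(t - k, n) for k, x in enumerate(samples)))
    return out


def to_dbfs(level: float) -> float:
    return 20.0 * math.log10(level) if level > 0 else float("-inf")


def sample_peak_dbfs(samples: Sequence[float]) -> float:
    """What a sample-point meter shows: the largest sample magnitude."""
    return to_dbfs(max(abs(x) for x in samples))


def true_peak_dbfs(samples: Sequence[float], factor: int = 8) -> float:
    """What a reconstructed meter shows: the peak of the oversampled waveform."""
    return to_dbfs(max(abs(y) for y in reconstruct(samples, factor)))


if __name__ == "__main__":
    # A sine at fs/4 with a 45-degree phase offset: every peak falls exactly
    # halfway between two samples, so the samples only reach about 0.707.
    tone = [math.sin(math.pi * k / 2 + math.pi / 4) for k in range(64)]
    print(f"sample peak: {sample_peak_dbfs(tone):+.2f} dBFS")
    print(f"true peak:   {true_peak_dbfs(tone):+.2f} dBFS")
```

For that tone the sample-point reading comes out around -3.01 dBFS while the reconstructed reading is about 0 dBFS: a 3 dB over that the sample meter never shows.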

For most processing, intersample overs are easy to avoid by staying far enough away from the ceiling, -6 dB or more. Natural sounds don’t have much of this problem. Digital processing, though, is prone to creating fast changes. Changes that are too fast are easy to make, and they create alias distortion: the new high frequencies have nowhere to go above Nyquist, so they fold back down into the audio band. A quick look at many common limiters will show you just that.
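The folding is simple arithmetic: a sampled system cannot tell a component at f from one at f plus any multiple of f_s, so anything above Nyquist reappears mirrored below it. A small sketch with a hypothetical helper name, using hard clipping of a 5 kHz sine as an assumed stand-in for an overly fast process:

```python
def alias_frequency(freq_hz: float, sample_rate_hz: float) -> float:
    """Frequency (0..fs/2) at which a component at freq_hz shows up after sampling."""
    folded = freq_hz % sample_rate_hz  # sampling cannot tell f from f + k*fs
    if folded > sample_rate_hz / 2:
        folded = sample_rate_hz - folded  # mirror image around Nyquist
    return folded


if __name__ == "__main__":
    # Hard-clipping a 5 kHz sine creates odd harmonics; at 44.1 kHz the ones
    # above 22.05 kHz fold back to frequencies unrelated to 5 kHz.
    fs = 44100.0
    for harmonic in (3, 5, 7, 9):
        f = 5000.0 * harmonic
        print(f"{f:>7.0f} Hz -> {alias_frequency(f, fs):>7.0f} Hz")
```

The 15, 25, 35 and 45 kHz harmonics land at 15 kHz, 19.1 kHz, 9.1 kHz and 900 Hz. The last three are not harmonics of 5 kHz at all, which is why aliasing sounds so much worse than ordinary harmonic distortion.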

Many of the loudness tricks have a high potential for both aliasing and intersample peaks. A sine or two and an FFT analyzer like Voxengo’s free Span VST help you avoid the alias buggers. By keeping an eye on both sample-point levels and reconstructed levels, it’s easy to steer clear of the worst intersample-peak problems. Some processes are more prone to creating them than others; by watching both meters, it’s very clear what’s going on.
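A minimal version of the “sine and a spectrum” check, assuming a hard clipper as the process under test. It computes a direct DFT on a couple of bins rather than running a real FFT analyzer such as Span; the tone, bin choices and function names are illustrative assumptions.

```python
import math
from typing import List


def dft_magnitude(x: List[float], k: int) -> float:
    """Magnitude of DFT bin k, computed directly (fine for a handful of bins)."""
    n = len(x)
    re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
    im = -sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
    return math.hypot(re, im)


def make_tone(fs: int = 44100, freq: int = 5000, n: int = 441, drive: float = 1.0) -> List[float]:
    # n = 441 at 44.1 kHz gives 100 Hz bins and exactly 50 cycles of 5 kHz,
    # so every harmonic and alias lands exactly on a bin without leakage.
    return [drive * math.sin(2 * math.pi * freq * i / fs) for i in range(n)]


def hard_clip(x: List[float], limit: float = 1.0) -> List[float]:
    return [max(-limit, min(limit, v)) for v in x]


def alias_level_db(x: List[float], fundamental_bin: int = 50, alias_bin: int = 91) -> float:
    """Level of alias_bin relative to fundamental_bin, in dB.

    Defaults: bin 91 is 9.1 kHz (the 7th harmonic of 5 kHz folded back), bin 50 is 5 kHz.
    """
    ratio = dft_magnitude(x, alias_bin) / dft_magnitude(x, fundamental_bin)
    return 20 * math.log10(ratio) if ratio > 0 else float("-inf")


if __name__ == "__main__":
    clean = make_tone(drive=0.9)
    clipped = hard_clip(make_tone(drive=2.0))  # a stand-in for a harsh process
    print(f"clean:   9.1 kHz alias at {alias_level_db(clean):.1f} dB re fundamental")
    print(f"clipped: 9.1 kHz alias at {alias_level_db(clipped):.1f} dB re fundamental")
```

The clean tone shows nothing at 9.1 kHz beyond floating-point noise, while the clipped one shows a clear component there that has no harmonic relationship to the 5 kHz input.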

I did some quick and simple testing with a limiter and sample-rate conversion (SRC) some time ago. It seems to indicate that 4x oversampling while limiting is enough to avoid intersample overs when combined with a -0.3 dBFS ceiling. At 8x relative to 44.1 kHz (88 kHz material oversampled 4x in the Voxengo Elephant limiter), the difference between the reconstructed peak meter and the sample-point peak meter is something like a tenth or two of a dB. But this is, as Bruno noted, starting at the wrong end of the problem. The correct way would be to build reconstruction into the limiter's sidechain.
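A minimal sketch of what reconstruction in the sidechain could mean: the detector measures the oversampled (reconstructed) waveform, while the gain is applied at the original sample rate. Assumptions: the buffer is treated as one period of a periodic signal so the interpolation is exact for the test tone; the names and the -0.3 dBFS default are illustrative; there is no lookahead or attack/release smoothing, which any usable limiter needs. A time-varying gain also creates new content of its own, so the output should still be checked on a reconstructed meter.

```python
import math
from typing import List, Sequence


def periodic_sinc(u: float, n: int) -> float:
    """Band-limited interpolation kernel for one period of n samples."""
    if abs(u - round(u)) < 1e-12:
        return 1.0 if round(u) % n == 0 else 0.0
    if n % 2 == 0:
        return math.sin(math.pi * u) / (n * math.tan(math.pi * u / n))
    return math.sin(math.pi * u) / (n * math.sin(math.pi * u / n))


def oversample(samples: Sequence[float], factor: int) -> List[float]:
    """Reconstructed waveform at `factor` points per sample (periodic assumption)."""
    n = len(samples)
    return [
        sum(x * periodic_sinc(m / factor - k, n) for k, x in enumerate(samples))
        for m in range(n * factor)
    ]


def sidechain_gains(samples: Sequence[float], ceiling_dbfs: float = -0.3, factor: int = 4) -> List[float]:
    """Per-sample gain from a detector that watches the reconstructed waveform.

    Each sample's gain covers the reconstructed points from one sample period
    before it to one after it, so an intersample peak pulls down both neighbours.
    Detector and gain computer only: no lookahead, attack or release.
    """
    ceiling = 10.0 ** (ceiling_dbfs / 20.0)
    up = oversample(samples, factor)
    total = len(up)
    gains = []
    for k in range(len(samples)):
        centre = k * factor
        # Wrap around the buffer edges, consistent with the periodic assumption.
        peak = max(abs(up[(centre + j) % total]) for j in range(-factor, factor + 1))
        gains.append(min(1.0, ceiling / peak) if peak > 0 else 1.0)
    return gains


if __name__ == "__main__":
    tone = [math.sin(math.pi * k / 2 + math.pi / 4) for k in range(64)]
    ceiling = 10.0 ** (-0.3 / 20.0)
    naive = min(1.0, ceiling / max(abs(x) for x in tone))  # sample-point detector
    gains = sidechain_gains(tone)
    print(f"sample-point detector gain: {naive:.4f}")
    print(f"reconstructing detector gain (min): {min(gains):.4f}")
```

On the f_s/4 test tone, whose samples only reach about 0.707, a sample-point detector sees nothing to limit and leaves the true peak at 0 dBFS, while the reconstructing detector pulls every sample down to the -0.3 dBFS ceiling.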