Growing up, I’d stay awake each New Year’s Eve to watch the telecast of the Times Square ball drop. It was always a bright, colorful spectacle.

In my teens, I visited Times Square in person. Standing in the middle of New York City, my gaze followed someone’s pointed finger towards the top of a distant building. “That’s where the ball drops.”

Really? How underwhelming.

Once you see it in person, you realize that the ball drop is a better presentation on TV than it is in real life. A wide-angle camera shot showing an accurate perspective – a relatively tiny object from relatively far away – would be pretty bland on TV.

You could say that the philosophy governing this production is not to convey a truly accurate representation of being there in real life, but rather to create an engaging, larger-than-life viewing experience that in some ways may be more “ideal” than the event itself.

Think about how studio recordings were made in the 1940s and 50s. The recording industry was dominated by a handful of major labels that operated their own studios, usually in New York, Chicago, and Hollywood.

Making a record basically consisted of putting the musicians in a big room and letting them play, with a couple of microphones to capture the proceedings. Philosophically, the goal was to simply record whatever it sounded like in the room. The acoustic environment of the studio was an inseparable element of the recording, which was unprocessed, un-doctored, un-romanticized – a wide shot of Times Square.

Today’s popular music recordings can take advantage of all sorts of electronic tools to enhance performances themselves – pitch correction, quantization, overdubs – and create interesting sounds and sonic environments that couldn’t possibly exist in real life. I don’t think this is inherently a bad thing; it’s just a different approach.

It’s the difference between a documentary about ancient Sparta and the movie “300.” A rich, polished production – zooming in on the Times Square ball – is what many listeners have come to expect.

Perception Vs Reality

What about live recordings? They might seem, by nature, more in line with the “old school” recording philosophy – an accurate, real account of a performance. I submit that this is not the case.

Stand in a room with a friend and have them talk. Record the speech on your smartphone. Pay careful attention to the acoustic experience in person, then put on some headphones and listen to the recording. Many people are shocked by how much reverb and reflections dominate the recording.

If the goal were simply to capture what you would have heard in the room, you could slap up a pair of microphones at front of house and call it a day. Lots of engineers do this regularly via a smartphone XY-pair attachment or the ubiquitous Zoom H4n recorder. The problem is that the resulting recording bears little resemblance to actually being there. Most listeners would be disappointed by a live album of this quality.

The reverb was there all along, but when you were in the space, your brain used directional cues from your pinnae (the outer ears) to key in on the direct sound from the source and de-emphasize everything else.

Psychoacoustically, we can “refocus” our auditory perception, like eavesdropping at a party, without moving our heads. (By contrast, we have to point our eyes at whatever we wish to see. We can “listen to” but we have to “look at.”) It’s sometimes called the “cocktail party” effect.

Recording the source with a microphone short-circuits this directional mechanism, and reverb becomes a nuisance. We can therefore deduce that the “perfect amount” of reverb in a live venue (good luck with that) is not the ideal amount for a live recording of the same show.

Even in a nice recording studio, the reverberant field is what it is, and we get what we get. And I doubt a hockey arena is an optimal acoustic environment for any recording.

So my live recording strategy is to try to minimize the contribution of the actual venue acoustics and replace it with artificial reverb that suits my purposes.

This might seem dishonest, but sound engineers routinely idealize things.

Think about what we would consider the “perfect” rock kick drum sound. How many real, physical kick drums have you encountered that sound like that?

No one in their right mind would listen to a concert with their head inside a kick drum, or an inch from Steven Tyler’s mouth for that matter – but that’s exactly where we consider an optimal mic placement!

Then we add a nice reverb of our own, and we end up with a version of the source that sounds better than the actual source in the room.

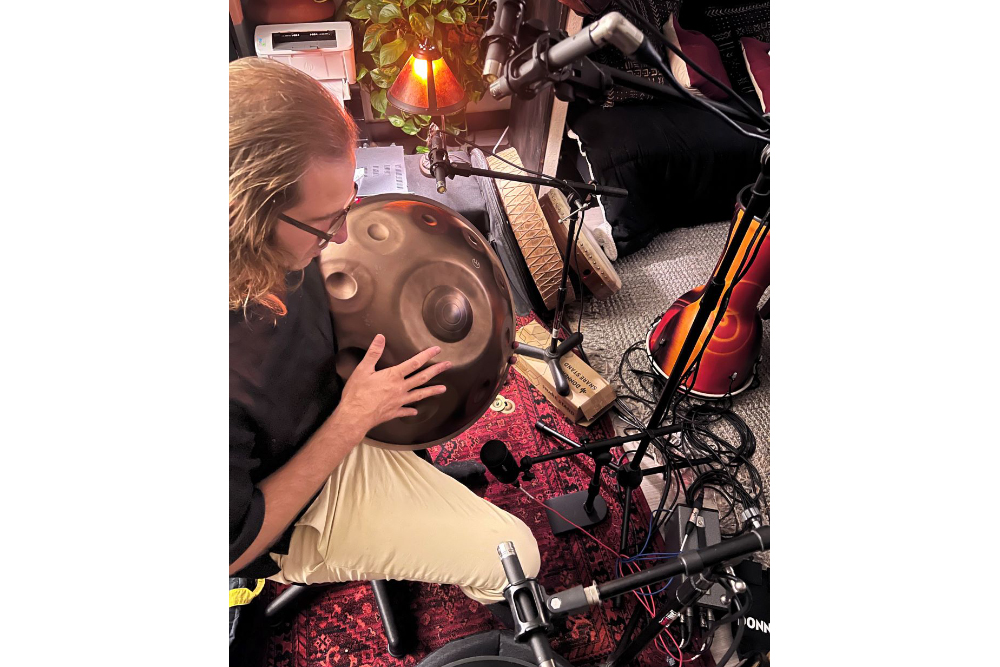

Can I Have Another (Mic)?

Once we’ve come to terms with the inherent “romanticization” required to create a pleasing recording, we can look at what we need to do technically to accomplish it.

First, put a mic on everything. We will seek to minimize room sound, so we can’t count on ambience to pick up stage bleed. If we don’t mic something, we won’t have it in the final recording. We also need to capture the response of the audience, for this is the heart and soul of a live recording. I use directional condenser mics placed to reject the mains and isolate the crowd.

For example, while multi-tracking a club gig, I deployed a pair of hypercardioid large-diaphragm condensers, positioned on the downstage edge, adjacent to the main stacks. Precise aiming placed the PA in the mics’ null zones, and I was pretty surprised by how little bleed came from the PA, considering the proximity.
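The payoff of precise aiming comes from first-order polar-pattern geometry. As a sketch, assuming the idealized textbook hypercardioid (sensitivity 0.25 + 0.75·cos θ, which real large-diaphragm capsules only approximate and which drifts with frequency), the null sits at roughly 109.5° off-axis rather than directly behind the mic, where the rear lobe is only about 6 dB down. The function names are illustrative.

```python
import math

def first_order_polar(alpha, theta_deg):
    """Relative sensitivity of an ideal first-order mic: alpha + (1 - alpha) * cos(theta)."""
    theta = math.radians(theta_deg)
    return alpha + (1.0 - alpha) * math.cos(theta)

def null_angle_deg(alpha):
    """Off-axis angle of the null; a null only exists for alpha <= 0.5 (cardioid and narrower)."""
    # Solve alpha + (1 - alpha) * cos(theta) = 0  ->  cos(theta) = -alpha / (1 - alpha)
    return math.degrees(math.acos(-alpha / (1.0 - alpha)))

HYPERCARDIOID = 0.25  # textbook coefficient; real capsules deviate, especially at high frequencies

print(f"null at +/-{null_angle_deg(HYPERCARDIOID):.1f} degrees off-axis")
for angle in (0, 90, 180):
    level_db = 20 * math.log10(abs(first_order_polar(HYPERCARDIOID, angle)))
    print(f"{angle:3d} degrees: {level_db:6.1f} dB")
```

In practical terms, the stacks belong roughly 110° off the mic's axis, not straight behind it.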

It helps to use a healthy number of audience mics because we want to hear people, not persons. A more representative sound will result with more mics, and there’s also a better chance of being able to edit out “screaming drunk guy” (there’s always one).

Since we want to capture the audience, not the room, my mic placements are relatively close and point away from the stage. Time alignment will be critical during mixdown, when we sum the audience mics in the mix. The most efficient method I know is to record-arm all the audience mics, solo the snare drum through the PA, and give it a wallop.

Now all our audience mics have a convenient transient, which we can use as an impulse response to line up the mics in the DAW (editing software) by visually aligning the waveforms. In my opinion, this is faster and more accurate than using tape measures, laser distos (distometers), or software sample delay.
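Visual alignment is really a search for each mic's lag behind a reference transient. As a sketch of the same idea in code: find where each track's snare hit first reaches a fraction of that track's own peak, then slide the track earlier by its lag. The reference is assumed here to be the close snare mic; the 50% threshold, track names, sample data, and 48 kHz rate are all hypothetical, and a simple threshold detector is a cruder stand-in for alignment by eye (or by cross-correlation) on real material.

```python
SAMPLE_RATE = 48_000  # assumed session rate

def onset_index(samples, fraction=0.5):
    """First sample reaching `fraction` of the track's own peak (level-independent)."""
    peak = max(abs(s) for s in samples)
    if peak == 0.0:
        raise ValueError("silent track has no transient")
    threshold = fraction * peak
    for i, s in enumerate(samples):
        if abs(s) >= threshold:
            return i

def alignment_offsets(tracks, reference):
    """Samples by which each track's transient lags the reference track's transient."""
    ref = onset_index(tracks[reference])
    return {name: onset_index(data) - ref for name, data in tracks.items()}

def shift_earlier(samples, n):
    """Slide a track n samples earlier, padding the end with silence."""
    if n < 0:
        raise ValueError("track arrives before the reference; delay it instead")
    return samples[n:] + [0.0] * n

# Hypothetical snare hit: close mic on stage plus three audience mics.
tracks = {
    "snare_close": [0.0] * 10 + [1.0, -0.7, 0.2],
    "aud_L": [0.0] * 130 + [0.4, -0.3, 0.1],
    "aud_R": [0.0] * 154 + [0.35, -0.25, 0.1],
    "aud_rear": [0.0] * 1450 + [0.2, -0.15, 0.05],
}

offsets = alignment_offsets(tracks, "snare_close")
aligned = {name: shift_earlier(data, offsets[name]) for name, data in tracks.items()}
for name, n in offsets.items():
    print(f"{name}: {n} samples ({1000 * n / SAMPLE_RATE:.2f} ms)")
```

Normalizing the threshold to each track's peak matters because the audience mics hear the snare far more quietly than the close mic does.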

By the way, before you hit “record” on the DAW, do yourself a huge favor and name all of the tracks in the software. When you start to record, the software will then generate properly-named – rather than just sequentially numbered – audio files. This can save hours of frustration during the editing stage on big projects.

Getting It In Shape

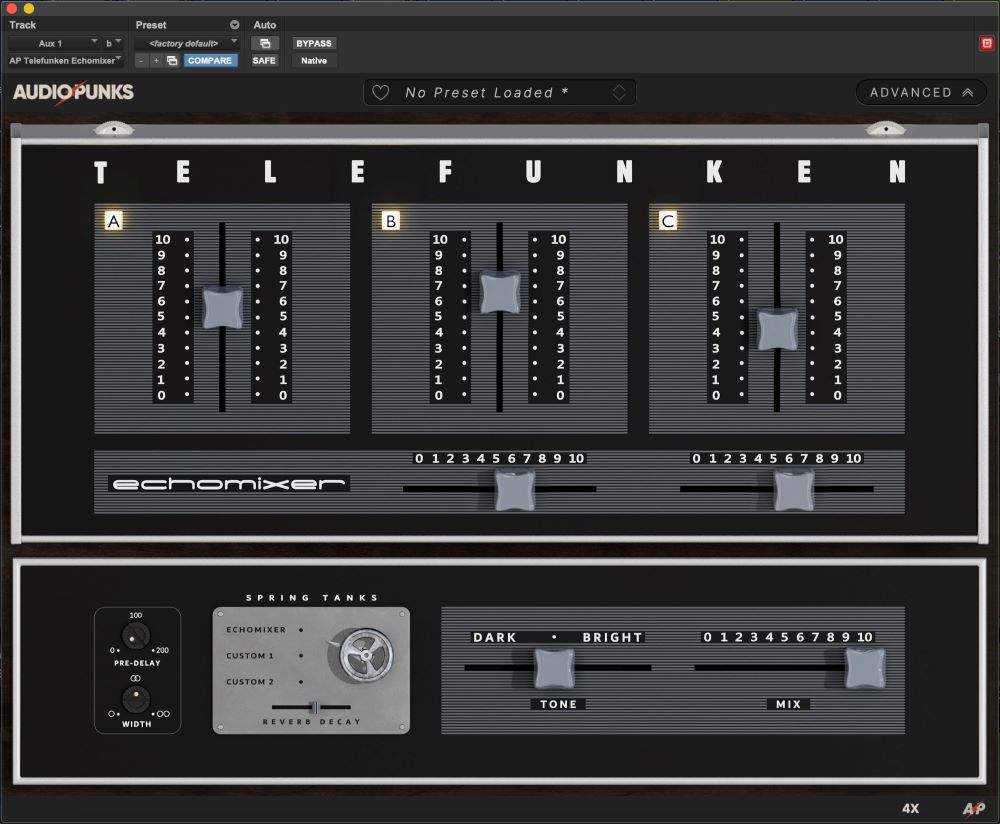

My mixdown strategy is to use the isolated direct lines from the stage mics to create a clean, full mix, just as I would in the studio. Studio engineers use a variety of reverbs – often a combination of rooms, plates, and halls – for different mix elements. I do the same thing for live recording, but I route all of the reverb returns through a VCA, which will come in handy when we add in “venue” reverb later.

Audience mics come next: enough to bring the audience into the mix, but not so much that they wash it out or cloud it up. We can ride levels up between songs or when the audience becomes very responsive. I feel it’s best to do this by hand, writing a Latch automation track, rather than by using gates, which to my ear can sound unnatural.
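Whatever the DAW, a latch-written ride ends up as a list of breakpoints that the automation engine interpolates between. A minimal sketch, assuming linear interpolation in dB (DAWs differ here; some interpolate in fader position or offer curves), shows why a hand-drawn ride behaves differently from a gate: the ramp timing is chosen by the engineer rather than triggered by signal level, so a ragged swell of applause can't chatter the mics open and closed. The function names and breakpoint values are hypothetical.

```python
def automation_gain_db(breakpoints, t):
    """Gain in dB at time t, interpolating linearly between (time_s, gain_db) points.

    Breakpoint times must be strictly increasing.
    """
    if t <= breakpoints[0][0]:
        return breakpoints[0][1]
    for (t0, g0), (t1, g1) in zip(breakpoints, breakpoints[1:]):
        if t <= t1:
            return g0 + (g1 - g0) * (t - t0) / (t1 - t0)
    return breakpoints[-1][1]  # hold the last value after the final breakpoint

def db_to_gain(db):
    return 10 ** (db / 20)

# Hypothetical ride on the audience-mic group: parked at -12 dB during the song,
# pushed up over two seconds as the applause starts, eased back for the next count-in.
ride = [(0.0, -12.0), (180.0, -12.0), (182.0, -3.0), (195.0, -3.0), (197.0, -12.0)]

for t in (90.0, 181.0, 190.0, 196.0):
    db = automation_gain_db(ride, t)
    print(f"t={t:6.1f}s  {db:6.2f} dB  x{db_to_gain(db):.3f}")
```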

Be on the lookout for what we call “wild material” – cheers, applause, and especially applause endings that decay cleanly without a band member tuning or speaking over it. This comes in handy if we later need to edit ourselves out of a tight spot.

At no point should a live recording be silent – some engineers use a looping track of room noise and audience noise to fill the gaps – so we’ll need samples of each. Audiences make more noise after uptempo songs than after slow ones, so we’ll want wild material of both. (For more about editing and mastering live albums, see Bob Katz’s excellent “Mastering Audio: The Art and the Science,” which I consult often.)
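A room-tone bed only works if the loop point is inaudible. One common way to get there, sketched here under the assumption of a mono clip held as a list of floats, is to fold the clip's tail over its head with an equal-power crossfade, so playback runs from the loop's last sample straight into the original continuation of the clip. The function names, clip, and lengths are illustrative; a DAW's own loop-crossfade feature does the same job.

```python
import math
import random

def make_seamless_loop(samples, fade_len):
    """Fold the tail of a clip over its head with an equal-power crossfade.

    The returned loop is fade_len samples shorter than the input and can be
    repeated end-to-start without a click or a gap.
    """
    if fade_len * 2 > len(samples):
        raise ValueError("clip too short for the requested crossfade")
    body = samples[:len(samples) - fade_len]
    tail = samples[len(samples) - fade_len:]
    looped = list(body)
    for i in range(fade_len):
        x = i / fade_len                    # 0 -> 1 across the crossfade
        fade_in = math.sin(0.5 * math.pi * x)
        fade_out = math.cos(0.5 * math.pi * x)
        # Equal power (sin^2 + cos^2 = 1) keeps uncorrelated noise at a steady level.
        looped[i] = body[i] * fade_in + tail[i] * fade_out
    return looped

def extend_to(loop, n_samples):
    """Repeat the loop until it covers n_samples."""
    reps = n_samples // len(loop) + 1
    return (loop * reps)[:n_samples]

rng = random.Random(7)
clip = [rng.gauss(0.0, 0.05) for _ in range(48_000)]  # stand-in for 1 s of room tone at 48 kHz
loop = make_seamless_loop(clip, fade_len=4_800)        # 100 ms crossfade
bed = extend_to(loop, 48_000 * 10)                     # 10 s of continuous tone
```

Because the loop's first sample is the sample that followed its last one in the original clip, the seam is just ordinary playback.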

I aggressively high-pass the audience mics, as high as 150 Hz or 200 Hz. Venues are boomy in the low frequencies, almost without exception. Remove this, and the mix tightens up. We don’t want a change in LF tone when the audience mics are ridden up and down, plus audiences are human and humans aren’t contributing anything worth keeping below 100 Hz. Get rid of it.
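To see what a 150 Hz high-pass actually does, here is a sketch of a second-order (12 dB/octave) high-pass built from the widely used RBJ Audio EQ Cookbook biquad formulas. The 48 kHz rate and Butterworth Q of 0.707 are assumptions; a DAW's stock EQ does the same job, often with steeper slope options.

```python
import cmath
import math

def highpass_coeffs(f0, fs, q=1 / math.sqrt(2)):
    """Second-order high-pass (RBJ Audio EQ Cookbook), normalized so a0 == 1."""
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    a0 = 1 + alpha
    b = [(1 + cos_w0) / 2 / a0, -(1 + cos_w0) / a0, (1 + cos_w0) / 2 / a0]
    a = [1.0, -2 * cos_w0 / a0, (1 - alpha) / a0]
    return b, a

def biquad(samples, b, a):
    """Run a Direct Form I biquad over a list of samples."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out

def response_db(b, a, f, fs):
    """Magnitude response in dB at frequency f, evaluated on the unit circle."""
    z1 = cmath.exp(-2j * math.pi * f / fs)  # z^-1
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return 20 * math.log10(abs(num / den))

FS = 48_000
b, a = highpass_coeffs(150.0, FS)
for f in (50, 100, 150, 1000):
    print(f"{f:5d} Hz: {response_db(b, a, f, FS):6.1f} dB")
```

With this Q, the corner frequency is the -3 dB point, and content an octave and a half below it (around 50 Hz) is roughly 19 dB down.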

If we want some “space” on LF from the kick drum and bass guitar, we can use some reverb of our own. It’s humorous to me that some engineers will balk at high-passing the LF noise out of an actual room recording but will high-pass an artificial reverb return without a second thought. It’s the same thing! LF mud has ruined more than a few live recordings – don’t let it be the death of yours.

Returning To The Scene

O.K., now we have a nice clean mix that hopefully minimizes the contribution of the actual, non-ideal acoustic environment. Let’s go ahead and put it back into an ideal one of our own.

We’ll need a reverb that at least somewhat resembles the original venue – a club gig should sound like a club, and an arena gig like an arena. But we have a lot of leeway here, and we can manipulate the reverb’s parameters to suit our purposes. I pay particular attention to pre-delay and the frequency response of the decay. Convolution reverbs will earn their keep in these applications.
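Under the hood, a convolution reverb convolves the dry send with an impulse response recorded in (or modeled on) a real space. The toy sketch below does that with direct convolution and shows where pre-delay lives: it is simply silence placed ahead of the IR. The synthetic, exponentially decaying noise IR is a placeholder assumption, not a real venue, and the function names are hypothetical; production plug-ins use measured IRs and FFT-based convolution because the direct form is far too slow for full-length tracks.

```python
import random

def synthetic_ir(rt60_s, fs, seed=1):
    """Placeholder IR: white noise decaying 60 dB over rt60_s seconds."""
    rng = random.Random(seed)
    n = int(rt60_s * fs)
    return [rng.uniform(-1.0, 1.0) * 10 ** (-3 * i / n) for i in range(n)]

def add_predelay(ir, predelay_ms, fs):
    """Pre-delay is just silence ahead of the first reflection."""
    return [0.0] * round(predelay_ms * fs / 1000) + list(ir)

def convolve(signal, ir):
    """Direct-form convolution: every input sample launches a scaled copy of the IR."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for i, s in enumerate(signal):
        if s == 0.0:
            continue  # silent samples contribute nothing
        for j, h in enumerate(ir):
            out[i + j] += s * h
    return out

FS = 8_000  # deliberately low rate so the pure-Python loop stays quick
ir = add_predelay(synthetic_ir(rt60_s=0.5, fs=FS), predelay_ms=20.0, fs=FS)
dry_send = [1.0] + [0.0] * 99  # a single click stands in for the dry send
wet = convolve(dry_send, ir)
```

Shaping "the frequency response of the decay" would mean filtering the IR's later portion more heavily than its early part; that is left out here to keep the sketch short.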

We can really tailor the “venue reverb” to make it work for us, not against us – maybe exaggerate the space for a ballad and then dial it back a bit to tighten up an uptempo song. This is why we put “studio” reverbs on a VCA – we can easily balance them against the “venue” verb dynamically to control the overall reverberant characteristic as we subtly manipulate the room sound. If we’re pushing the venue verb a bit on a slow number, it’s easy to pull back on the other reverbs to keep our mix from washing out.
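The VCA makes this balancing act a one-fader move because it offsets every assigned reverb return by the same number of dB. As a back-of-the-envelope sketch, assuming the returns are uncorrelated (so their powers add) and that the goal is to hold the total reverb level steady while the venue verb is pushed, the required VCA trim can be computed directly. The levels and function names are hypothetical; in practice this is done by ear.

```python
import math

def power_sum_db(levels_db):
    """Level of several uncorrelated returns summed together (powers add)."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))

def vca_offset_for_constant_total(studio_db, venue_db, target_db):
    """VCA trim (dB) on the studio-reverb group that holds total reverb level at target_db."""
    remaining = 10 ** (target_db / 10) - 10 ** (venue_db / 10)
    if remaining <= 0:
        raise ValueError("venue reverb alone already meets or exceeds the target")
    # A VCA adds the same dB offset to every member, so the group's power scales uniformly.
    return 10 * math.log10(remaining) - power_sum_db(studio_db)

# Hypothetical return levels relative to the dry mix: plate, room, hall.
studio = [-18.0, -20.0, -22.0]
target = power_sum_db(studio + [-16.0])  # overall reverb level on an uptempo song
ballad_trim = vca_offset_for_constant_total(studio, -14.0, target)  # venue verb pushed 2 dB
print(f"pull the studio-reverb VCA by {ballad_trim:.2f} dB")
```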

To sound realistic, I use at least one discrete reflection, basically to give the “virtual venue” a back wall. (The audience mics won’t capture a clean rear-wall reflection: they’re time-aligned to sound arriving from the front, so a reflection arriving from the rear lands at a different relative time in each mic and smears when they’re summed.)

I employ a basic single-tap delay with highs and lows rolled off, and here’s the secret sauce: don’t route the delay to the main mix, but instead to the “venue” reverb. Reflective paths are reverberant, too. The “dry” delays we’re used to using sound cool as an effect but aren’t convincing in an acoustic sense.
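A sketch of that signal flow, assuming a mono vocal send held as a list of floats: the tap delay is derived from a hypothetical listener-to-back-wall distance (the reflection travels past the listener to the wall and back, so the extra path is twice that distance), the highs and lows are rolled off with gentle one-pole filters, and the result is meant for the venue reverb's input rather than the main bus. The 30 m distance, 300 Hz and 3 kHz corners, -18 dB level, and function names are placeholders, not settings from any particular mix.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, roughly room temperature

def rear_wall_delay_ms(listener_to_wall_m):
    """Extra path for a reflection that passes the listener, hits the back wall, and returns."""
    return 2 * listener_to_wall_m / SPEED_OF_SOUND * 1000

def one_pole_lowpass(samples, cutoff_hz, fs):
    """Gentle 6 dB/octave low-pass."""
    k = 1 - math.exp(-2 * math.pi * cutoff_hz / fs)
    y, out = 0.0, []
    for x in samples:
        y += k * (x - y)
        out.append(y)
    return out

def one_pole_highpass(samples, cutoff_hz, fs):
    """Gentle 6 dB/octave high-pass: the input minus its low-passed copy."""
    lp = one_pole_lowpass(samples, cutoff_hz, fs)
    return [x - l for x, l in zip(samples, lp)]

def rear_wall_tap(vocal, fs, delay_ms, level_db=-18.0, hp_hz=300.0, lp_hz=3000.0):
    """Single delayed, band-limited copy of the vocal, intended for the venue-reverb send."""
    n = round(delay_ms * fs / 1000)
    delayed = [0.0] * n + list(vocal)
    band = one_pole_lowpass(one_pole_highpass(delayed, hp_hz, fs), lp_hz, fs)
    gain = 10 ** (level_db / 20)
    return [gain * s for s in band]

FS = 48_000
vocal = [1.0] + [0.0] * 9_999          # an impulse stands in for the vocal send
delay_ms = rear_wall_delay_ms(30.0)     # about 175 ms for a 30 m throw to the back wall
tap = rear_wall_tap(vocal, FS, delay_ms)
# Route `tap` into the venue reverb's input alongside the normal vocal send,
# NOT to the main mix bus, so the "back wall" comes back reverberant.
```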

It doesn’t take much of a reflection to imply the acoustic space, so keep it low. Nor do we need everything in it – surprisingly, just the vocal is often enough.

Transient instruments like drums sound bad coming off the back wall, and I’ve found they’re usually not necessary to create the illusion. Listen to Billy Joel’s excellent live album from Shea Stadium, particularly “Miami 2017 (Seen The Lights Go Out On Broadway),” and study the single “rear wall reflection.” It’s very deliberate and works well.

This is another example of our electronic approximation being superior to the real deal – I’ve never encountered a rear wall that only reflects vocals.

This is just a new iteration of the old debate over whether reinforced sound is natural. It is, but in a complementary way – without reinforcement, artists would need to scream to be heard. That’s unnatural. With “unnatural” reinforcement, they can sing and speak in a natural way. The “unnaturalness” we bring to the table enables natural behavior from the artists.

So, in my view, a good live recording is an extension of the same concept. Only by departing from what a listener would have heard if they were actually there can we make a listener feel like they were actually there.